The validation_split argument (which generates a holdout set from the training data) is not supported when training from tf.data Dataset objects, because it requires the ability to index the samples of the dataset, which is not possible in general with the Dataset API. The same training and evaluation APIs also apply to multi-input, multi-output models.

Each cell contains the label's confidence for this image.

Consider the following model, which has an image input of shape (32, 32, 3) (that's (height, width, channels)), a time-series input of shape (None, 10) (that's (timesteps, features)), and two outputs: a "score" of shape (1,) and a probability distribution over five classes of shape (5,).

On Lines 73-75, we link the classifierNN (image classifier) output to an XLinkOut node, allowing us to display or save the image classification predictions. The image_classification.py script begins with `import tensorflow as tf`.

The easiest way to save checkpoints during training is the ModelCheckpoint callback, which can also be used to implement fault tolerance: the ability to restart training from the last saved state of the model if training is interrupted.

`id_index` (int, optional): index of the class categories; -1 to disable.

This tutorial demonstrates how to load a dataset efficiently off disk and how to identify overfitting and mitigate it with data augmentation and dropout. It follows a basic machine learning workflow: examine and understand the data, build an input pipeline, build the model, train it, test it, and improve it. In addition, the notebook demonstrates how to convert a saved model to a TensorFlow Lite model for on-device machine learning on mobile, embedded, and IoT devices. There are multiple ways to fight overfitting in the training process.

Now, let's start with today's tutorial and learn about deployment on the OAK! I highly recommend reading the blog post to get a rigorous treatment of uncertainty in general and in deep nets in particular.

From Lines 80-83, we define the softmax() function, which calculates the softmax values for a given set of scores in x.
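The tutorial's softmax() helper on Lines 80-83 is not reproduced here; as a rough sketch of what such a helper computes, here is a numerically stable version in plain Python. Subtracting the maximum score before exponentiating avoids overflow without changing the result, and the confidence of a prediction is simply the largest probability. The function names and the labels below are illustrative assumptions, not the tutorial's code.

```python
import math

def softmax(x):
    """Return softmax probabilities for a list of raw scores (logits)."""
    m = max(x)                          # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def top_prediction(scores, labels):
    """Return (label, confidence) for the highest-probability class."""
    probs = softmax(scores)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return labels[idx], probs[idx]

# Hypothetical logits and class labels, for illustration only
label, conf = top_prediction([2.0, 1.0, 0.1], ["carrot", "tomato", "potato"])
print(label, round(conf, 3))  # prints: carrot 0.659
```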
$$ e \pm z_N\sqrt{\frac{e\,(1-e)}{n}},$$

where $e$ is the error rate observed on $n$ test samples and $z_N$ is the standard-normal quantile for the chosen confidence level.

A custom callback can, for example, save a list of per-batch loss values during training. When you're training a model on relatively large datasets, it's crucial to save checkpoints of your model at frequent intervals.

It resizes the array to the given shape, then transposes it so that the first dimension represents the channels, the second the rows, and the third the columns. Finally, the function returns the pipeline object, configured with the classifier model and input/output streams, to the calling function.

You can specify a separate loss for each output and modulate the contribution of each output to the total loss of the model.

How about modeling the error on the training set to "predict" the error at inference time?
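As a sketch of the interval above, assuming the test errors are independent and $n$ is large enough for the normal approximation (the error counts below are made up):

```python
import math

def error_rate_interval(errors, n, z=1.96):
    """Normal-approximation confidence interval for a classifier's error rate.

    errors: number of misclassified test samples
    n:      number of test samples
    z:      standard-normal quantile z_N (1.96 for roughly 95% confidence)
    """
    e = errors / n
    half_width = z * math.sqrt(e * (1 - e) / n)
    return e - half_width, e + half_width

# Hypothetical result: 12 errors on 40 test images (error rate 0.30)
low, high = error_rate_interval(errors=12, n=40)
print(f"95% CI approx [{low:.3f}, {high:.3f}]")  # prints: 95% CI approx [0.158, 0.442]
```

With only 40 test samples the interval is wide; it shrinks roughly with $1/\sqrt{n}$.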

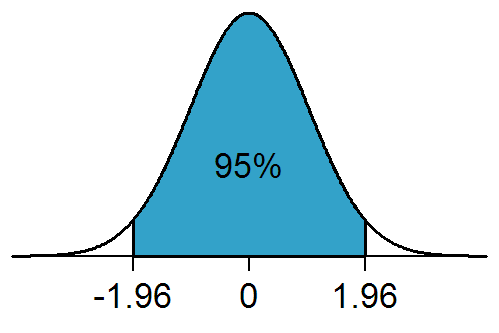

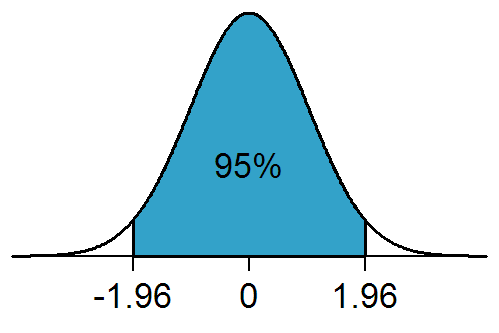

For the cost function, you can use the NLPD (negative log probability density). Ref. [20] exhibits the capability of AI in determining disease progression from CT scans.

This article starts with the basic concepts of confidence intervals and hypothesis testing, and then works through each concept with examples.

You're already using softmax in the setup; just apply it to the final vector to convert it to probabilities.

The codec being used is XVID.

The dataset contains five sub-directories, one per class. After downloading, you should have a copy of the dataset available.

Scientists use some preliminary assumptions (called axioms) to derive results. Processed TensorFlow files are available from the indicated URLs. We first need to review our project directory structure.
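A minimal sketch of the NLPD cost for the mean-variance setup, assuming the network predicts a mean mu(x) and a standard deviation sigma(x) > 0 for each input and that the predictive distribution is Gaussian (an assumption; other likelihoods give other formulas). In a real model you would write this with tensor ops inside a custom loss; plain Python is used here only to keep the arithmetic visible.

```python
import math

def gaussian_nlpd(y_true, mu, sigma):
    """Mean negative log predictive density under N(mu, sigma^2).

    All arguments are equal-length lists; every sigma must be > 0.
    """
    total = 0.0
    for y, m, s in zip(y_true, mu, sigma):
        # -log N(y; m, s^2) = 0.5*log(2*pi*s^2) + (y - m)^2 / (2*s^2)
        total += 0.5 * math.log(2 * math.pi * s ** 2) + (y - m) ** 2 / (2 * s ** 2)
    return total / len(y_true)

y = [1.0, 2.0, 3.0]
mu = [1.1, 1.9, 3.2]
print(gaussian_nlpd(y, mu, [0.2, 0.2, 0.2]))  # sigma matched to the errors: lower loss
print(gaussian_nlpd(y, mu, [5.0, 5.0, 5.0]))  # overly wide sigma: higher loss
```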

Keras models can also be trained from Pandas dataframes, or from Python generators that yield batches of data and labels. For a top-k retrieval metric, see https://www.tensorflow.org/recommenders/api_docs/python/tfrs/metrics/FactorizedTopK.

(Activity regularization is built into all Keras layers; a custom layer that adds it serves only as a concrete example.) You can log metric values the same way, using add_metric(). In the Functional API, you can also call model.add_loss(loss_tensor).

You can pass a tuple of NumPy arrays (x_val, y_val) to the model as validation_data to evaluate a validation loss and validation metrics at the end of each epoch.

The detected keypoints are indexed by a part ID, with a confidence score between 0.0 and 1.0.

We learned the OAK hardware and software stack from the ground level. On Line 23, the classifierNN object is linked to the classifierIN object, which was created earlier to define the input stream name. The utils.py script defines several functions. On Lines 2-6, we import the necessary packages, and on Line 8 we define the function create_pipeline_images().

This example uses the MoveNet TensorFlow Lite pose estimation model from TensorFlow Hub. You have already tensorized that image and saved it as img_array. The input data can be NumPy arrays (if your data is small and fits in memory) or tf.data Dataset objects.

The confidence of that prediction is simply the probability of the top item.

How to find the confidence level of a classification?

Method 3: mean-variance estimation.

This section also describes the confidence of the model overall. Luckily, all these libraries are pip-installable. Calculate confidence intervals at the 95% confidence level.

(…at the point $Y = E[Y \mid X]$ there is a minimum, not a maximum), and there are many such subtleties.

The validation_split argument lets you automatically reserve part of your training data for validation.
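To make the validation_split behavior concrete: as far as I know, Keras builds the holdout set from the last fraction of the samples passed to fit(), before any shuffling. The helper below is a hypothetical plain-Python imitation of that slicing (not Keras code), handy for checking which samples end up in validation; rejecting values outside (0, 1) is a choice of this sketch.

```python
def split_like_validation_split(x, y, validation_split):
    """Mimic how validation_split slices in-memory data (illustrative sketch).

    The last `validation_split` fraction of samples, taken before any
    shuffling, becomes the validation set.
    """
    if not 0.0 < validation_split < 1.0:
        raise ValueError("validation_split must be in (0, 1)")
    split_at = int(len(x) * (1.0 - validation_split))
    return (x[:split_at], y[:split_at]), (x[split_at:], y[split_at:])

x = list(range(10))
y = [v % 2 for v in x]
(train_x, train_y), (val_x, val_y) = split_like_validation_split(x, y, 0.2)
print(train_x)  # [0, 1, 2, 3, 4, 5, 6, 7]
print(val_x)    # [8, 9]
```

Because the split happens before shuffling, data sorted by class can leave the validation set with only one class; shuffle the arrays yourself first if that is a risk.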
Let's now go one step further and use the OAK's color camera to classify the frames, which, in our opinion, is where you put your OAK module to real use, catering to a wide variety of applications discussed in the 1st blog post of this series. Similarly, you can call model.add_metric(metric_tensor, name, aggregation). The setup imports are `import tensorflow as tf`, `from tensorflow import keras`, and `from tensorflow.keras import layers`.

transition_params: A [num_tags, num_tags] matrix of binary potentials.

Image Classification using TensorFlow Pretrained Models: all the code that we will write goes into the image_classification.py Python script. Inputs can also be passed as dicts mapping input names to NumPy arrays.

Figure 2 shows the steps required to convert the deep learning model from frameworks like PyTorch or TensorFlow to the MyriadX blob file format and deploy it on the OAK device.

@Mario That is a very broad question; you can start with TensorFlow Probability. (Related question: "Keras: How to obtain confidence of prediction class?")

At compilation time, we can specify different losses for different outputs by passing the loss functions as a list.

In conclusion, in this article you learned how to deploy a TensorFlow CNN model to Heroku by serving it as a RESTful API and by using Docker. The professor wants the class to be able to score above 70 on the test.



Similar to what you did earlier in the tutorial, you can use the TensorFlow Lite model to classify images that weren't included in the training or validation sets. You can also write a custom layer that adds an activity-regularization loss. Class weights can be used to balance classes without resampling, or to train a model that gives more importance to a particular class. For a complete guide on serialization and saving, see the guide to saving and serializing models.

@D.W. No, because as $\sigma \rightarrow +\infty$ the distribution starts to resemble a uniform distribution with near-zero density at all points, so the negative log density grows without bound.
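To see why an ever-wider $\sigma$ is not a loophole, write out the Gaussian negative log density for a single sample with predicted mean $\mu$, standard deviation $\sigma$, and residual $r = y - \mu$:

$$
-\log p(y \mid \mu, \sigma) = \frac{1}{2}\log\left(2\pi\sigma^2\right) + \frac{r^2}{2\sigma^2}.
$$

As $\sigma \rightarrow +\infty$ the second term vanishes, but the first grows like $\log\sigma$, so the loss diverges; as $\sigma \rightarrow 0$ with $r \neq 0$, the second term diverges instead. Setting the derivative with respect to $\sigma$ to zero,

$$
\frac{1}{\sigma} - \frac{r^2}{\sigma^3} = 0 \quad\Longrightarrow\quad \sigma = |r|,
$$

so, for a fixed residual, the loss is minimized when $\sigma$ matches the size of the error. This is what pushes $\sigma(x_i)$ to track how uncertain the prediction actually is.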
However, optimizing and deploying those best models onto an edge device allows you to put your deep learning models to actual use in industries where deployment on edge devices is mandatory, and it can be a cost-effective solution. New hand pose detection with MediaPipe and TensorFlow.js allows you to track multiple hands simultaneously in 2D and 3D, along with a confidence score. You will find more details about this in the Passing data to multi-input, multi-output models section.

After applying softmax, I'm getting output that begins [[ 1.

Sample weights can also be used to mask the loss function, entirely discarding the contribution of certain samples to the total loss.

We will cover:

1. What a confidence interval is, with a basic manual calculation;
2. z-test of one sample mean in R;
3. t-test of one sample mean in R;
4. Comparison of two sample means in R.

You can learn more about TensorFlow Lite through tutorials and guides. For this tutorial, choose the tf.keras.optimizers.Adam optimizer and the tf.keras.losses.SparseCategoricalCrossentropy loss function. For now, let's quickly summarize what we learned today.

Losses added in this way get added to the "main" loss during training (the one passed to compile()). The deep learning model could be in any format, such as PyTorch, TensorFlow, or Caffe, depending on the framework where the model was trained.

However, as far as I know, Conformal Prediction (CP) is the only principled method for building calibrated prediction intervals (PIs) in nonparametric regression and classification problems.
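The outline above starts with a manual confidence-interval calculation and a one-sample z-test, shown there in R. A rough Python equivalent using the standard library's statistics.NormalDist is sketched below; the scores, the "known" population standard deviation of 3, and the null mean of 70 are all made-up values (loosely echoing the professor's "above 70" target). The test here is two-sided; for a one-sided "above 70" question you would halve the p-value when the sample mean is above 70.

```python
import math
from statistics import NormalDist, mean

def z_interval_and_test(sample, sigma, mu0, confidence=0.95):
    """One-sample z confidence interval and two-sided z-test (sigma assumed known)."""
    n = len(sample)
    xbar = mean(sample)
    se = sigma / math.sqrt(n)                              # standard error of the mean
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)    # about 1.96 for 95%
    ci = (xbar - z_crit * se, xbar + z_crit * se)
    z_stat = (xbar - mu0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))
    return ci, z_stat, p_value

scores = [72, 68, 75, 71, 74, 69, 73, 70]   # hypothetical test scores
ci, z, p = z_interval_and_test(scores, sigma=3.0, mu0=70)
print(ci, z, p)
```

When the population standard deviation is unknown and the sample is small, the t-test (item 3) replaces the normal quantile with a Student t quantile.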
There are two ways to weight the data independently of sample frequency: class weights and sample weights. Class weights are set by passing a dictionary to the class_weight argument of Model.fit(); the dictionary maps class indices to the weight to use for samples belonging to each class.

Moreover, sometimes these networks do not even fit (run) on a CPU.

As an example, you can implement a custom CategoricalTruePositives metric that counts how many samples were correctly classified as belonging to a given class.

We hope you enjoyed this series on OpenCV AI Kit as much as we did!

"But if you give me a photo of an ostrich and force me to decide whether it's a cat or a dog, I had better return a prediction with very low confidence."

TensorFlow is an open-source machine learning software library for numerical computation using data flow graphs. I'm learning myself and would appreciate feedback!

You can use it in a model with two inputs (input data and targets), compiled without a loss argument. Then, on Lines 17 and 18, two queues are defined, classifierIN and classifierNN, which are used to communicate with the device and send input images for predictions.

When passing data to the built-in training loops of a model, you should use either NumPy arrays (if your data is small and fits in memory) or tf.data Dataset objects. If we only passed a single loss function to the model, the same loss function would be applied to every output.

Site design / logo 2023 Stack Exchange Inc; user contributions licensed under CC BY-SA.
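Conceptually, a class_weight dictionary just assigns each sample the weight of its class, which then scales that sample's contribution to the loss. The sketch below illustrates that mapping in plain Python. The helper names are hypothetical, the "balanced" formula n_samples / (n_classes * count) is a common heuristic rather than something Keras computes for you, and defaulting unlisted classes to 1.0 is a choice of this sketch.

```python
from collections import Counter

def class_to_sample_weights(labels, class_weight):
    """Expand a {class_index: weight} dict into one weight per sample.

    Classes missing from the dict default to 1.0 (a choice of this sketch).
    """
    return [class_weight.get(label, 1.0) for label in labels]

def balanced_class_weight(labels):
    """Common 'balanced' heuristic: n_samples / (n_classes * count_per_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

labels = [0, 0, 0, 0, 0, 0, 1, 1]   # hypothetical, imbalanced labels
weights = balanced_class_weight(labels)
print(weights)                      # the rarer class 1 gets the larger weight
print(class_to_sample_weights(labels, {0: 1.0, 1: 0.5}))
```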
The closer the number is to 1, the more confident the model is.

The learning rate decay schedule could be static (fixed in advance, as a function of the current epoch or the current batch index) or dynamic (responding to the current behavior of the model, in particular the validation loss).

I have never attempted this, due to the compute power that would be needed, and I make no claims that it works for certain, but one method that might work for a tiny neural net (or, with blazing-fast GPUs, a moderately sized one) would be to resample the training set, build many similar networks (say, 10,000) with the same parameters and initial settings, and build confidence intervals from the predictions of the bootstrapped nets.

We evaluate the model on the test data via evaluate(). Now, let's review each piece of this workflow in detail. Let's plot this model, so you can clearly see what we're doing here (note that the shapes shown in the plot are batch shapes rather than per-sample shapes).

In Keras, there is a method called predict() that is available for both Sequential and Functional models. TensorFlow Lite is a set of tools that enables on-device machine learning by helping developers run their models on mobile, embedded, and edge devices. Next, we define a function named get_frame().

If you want to run training only on a specific number of batches from a Dataset, you can pass the steps_per_epoch argument, which specifies how many training steps the model should run using this Dataset before moving on to the next epoch.

Predicting the function of a protein from its amino acid sequence is a long-standing challenge in bioinformatics.
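A cheap way to try the bootstrap idea before paying for thousands of networks is to swap in a tiny model. The sketch below uses an ordinary least-squares line as a stand-in for the network (a deliberate substitution for illustration, not the full-scale method described above) and synthetic data: it refits on resampled training sets and reads off a percentile interval for the prediction at one input. Note that this captures only the spread of the fitted model; a full prediction interval would also need to account for the noise in y.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (stand-in for a trained network)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:                      # degenerate resample: all x values identical
        return my, 0.0
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b

def bootstrap_interval(xs, ys, x_new, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the model's prediction at x_new."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]   # resample with replacement
        a, b = fit_line([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(a + b * x_new)
    preds.sort()
    lo = preds[int((alpha / 2) * n_boot)]
    hi = preds[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi

# Synthetic training data: y = 2 + 0.5*x plus Gaussian noise
noise_rng = random.Random(1)
xs = [float(i) for i in range(20)]
ys = [2.0 + 0.5 * x + noise_rng.gauss(0, 1) for x in xs]
print(bootstrap_interval(xs, ys, x_new=10.0))
```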
I'm working on an application where I'd like to retrieve the standard deviation of the predictions made by the trees within an ensemble (currently a tfdf.keras.RandomForestModel) to use as an estimate of the confidence of a given prediction.

Here, you will standardize values to be in the [0, 1] range by using tf.keras.layers.Rescaling. There are two ways to use this layer.

The validation_steps argument specifies how many validation steps the model should run with the validation dataset before interrupting validation and moving on to the next epoch.

You would just get it like `prediction = model.predict(sample)[0]`. (Here, the targets are one-hot encoded and take values between 0 and 1.) Currently, this method returns only the predicted class.

NNs and various ML methods are for fast prototyping: they create "something" that seems to work "somehow", as checked with cross-validation.

$$ F_1 = 2\,\frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} $$
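The F1 score mentioned above is the harmonic mean of precision and recall. A small plain-Python sketch that computes all three for one class from label lists (returning 0.0 wherever a ratio is undefined, which is a convention chosen here):

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the `positive` class from label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# 2 true positives, 1 false positive, 1 false negative
print(precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```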
In the F1 score, this means that both metrics have the same importance.

On Line 40, the color space of the frame is converted from BGR to RGB using the cv2.cvtColor() function.

Besides NumPy arrays, eager tensors, and TensorFlow Datasets, other input sources are supported; for full control over training, see the guide "Writing a training loop from scratch".

This lesson is the last in our 4-part series on OAK-101. To learn how to deploy and run an image classification network inference on OAK-D, just keep reading.

All values in a row sum up to 1 (because the final layer of our model uses a softmax activation function).

Note that activity regularization is built into all Keras layers.

Built-in learning rate schedules include ExponentialDecay, PiecewiseConstantDecay, PolynomialDecay, and InverseTimeDecay.

The classifierIN variable is assigned the input queue for the classifier_in stream, and the classifierNN variable is assigned the output queue for the classifier_nn stream, defined in the create_pipeline_images() function. You can call .numpy() on the image_batch and labels_batch tensors to convert them to a numpy.ndarray.

When there are a small number of training examples, the model sometimes learns from noise or unwanted details in the training examples to an extent that negatively impacts its performance on new examples. This phenomenon is known as overfitting.

What about models that have multiple inputs or outputs?
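Keras ships several learning rate schedules (PolynomialDecay and InverseTimeDecay are named above, among others). Two of the simplest, ExponentialDecay and InverseTimeDecay, follow closed-form rules; the functions below are a plain-Python sketch of those formulas as I understand them from the Keras documentation. In a real model you would pass the schedule object as the optimizer's learning_rate instead of computing values by hand; these helpers are only for inspecting how the rate evolves.

```python
import math

def exponential_decay(step, initial_lr, decay_steps, decay_rate, staircase=False):
    """initial_lr * decay_rate ** (step / decay_steps), optionally floored."""
    p = step / decay_steps
    if staircase:
        p = math.floor(p)   # decay in discrete jumps every decay_steps
    return initial_lr * decay_rate ** p

def inverse_time_decay(step, initial_lr, decay_steps, decay_rate, staircase=False):
    """initial_lr / (1 + decay_rate * step / decay_steps), optionally floored."""
    p = step / decay_steps
    if staircase:
        p = math.floor(p)
    return initial_lr / (1 + decay_rate * p)

for step in (0, 500, 1000, 2000):
    print(step,
          exponential_decay(step, 0.1, 1000, 0.96),
          inverse_time_decay(step, 0.1, 1000, 0.5))
```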
You can apply it to the dataset by calling Dataset.map. Or, you can include the layer inside your model definition, which can simplify deployment. For more, see the complete guide to writing custom callbacks.

Finally, the function returns a tuple containing a Boolean value (True) and the processed frame as a contiguous array on Line 41.

After training the network, the output should look something like this for a given input.

The OpenVINO toolkit consists of a Model Optimizer and a Myriad Compiler. We create two output queues, one for the RGB frames and one for the neural network data. These queues send images to the pipeline for image classification and receive the predictions from the pipeline.

A "sample weights" array is an array of numbers that specify how much weight each sample in a batch should have in computing the total loss. With the default settings, the weight of a sample is decided by its frequency in the dataset.

In today's tutorial, we will go one step further and deploy the image classification model on OAK-D. First, we learn the process of converting and optimizing the TensorFlow image classification model, and then we test the converted model on OAK-D with both images and the OAK device camera stream.

These layers can be included inside your model like other layers and run on the GPU. This stream name is used to specify the input source for the pipeline.
Callbacks can also send notifications when training ends or when a certain performance threshold is exceeded. TensorBoard provides live plots of the loss and metrics for training and evaluation, (optionally) visualizations of the histograms of your layer activations, and (optionally) 3D visualizations of the embedding spaces learned by your Embedding layers.

For class index 6.

State update and results computation are kept separate (in update_state() and result(), respectively).

It's possible to give different weights to different output-specific losses (for instance, to privilege the "score" loss in our example by giving it twice the importance of the class loss) using the loss_weights argument.

For instance, validation_split=0.2 means "use 20% of the data for validation", and validation_split=0.6 means "use 60% of the data for validation".

For a tutorial on CP, see Shafer & Vovk (2008), J.

Here are the first nine images from the training dataset. You will pass these datasets to the Keras Model.fit method for training later in this tutorial.

Then, we covered the conversion and optimization of the trained image classification TensorFlow model to the .blob format.

There's a fully connected layer (tf.keras.layers.Dense) with 128 units on top of it that is activated by a ReLU activation function ('relu').

You can pass a Dataset directly to fit(), evaluate(), and predict(). Note that the Dataset is reset at the end of each epoch, so it can be reused for the next epoch.

Callbacks can also checkpoint the model at regular intervals or when it exceeds a certain accuracy threshold.

Let's consider the following model (here, we build it with the Functional API, but it could be a Sequential model or a subclassed model as well).

Class weights or sample weights can, for instance, give more importance to the correct classification of class #5 (which is the digit "5" in the MNIST dataset).

Finally, on Line 78, the function returns the pipeline object, which has been configured with the classifier model, color camera, image manipulation node, and input/output streams.

This will make your $\mu(x_i)$ try to predict your $y_i$, and your $\sigma(x_i)$ be smaller when you have more confidence and bigger when you have less.
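For the conformal prediction (CP) approach referenced in this thread, a minimal split-conformal sketch for regression looks like this: fit any model on one part of the data, compute absolute residuals on a separate calibration set, and use their ⌈(n+1)(1-α)⌉-th smallest value as the interval half-width. The model and data below are hypothetical stand-ins; the roughly 1-α coverage is marginal (averaged over inputs) and assumes calibration and test points are exchangeable.

```python
import math
import random

def conformal_half_width(residuals, alpha=0.1):
    """Split-conformal quantile of absolute calibration residuals."""
    scores = sorted(abs(r) for r in residuals)
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))   # rank of the conformal quantile
    if k > n:
        return math.inf                    # too few calibration points for this alpha
    return scores[k - 1]

def predict_interval(model, x, half_width):
    """Symmetric interval around the model's point prediction."""
    y_hat = model(x)
    return y_hat - half_width, y_hat + half_width

def model(x):
    """Hypothetical stand-in for an already-trained regressor."""
    return 2.0 * x

# Hypothetical calibration data (never used to fit the model)
rng = random.Random(0)
cal_x = [rng.uniform(0, 10) for _ in range(200)]
cal_y = [2.0 * x + rng.gauss(0, 1) for x in cal_x]
q = conformal_half_width([y - model(x) for x, y in zip(cal_x, cal_y)], alpha=0.1)
print(predict_interval(model, 5.0, q))
```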
You could also choose not to compute a loss for certain outputs, if these outputs are meant for prediction but not for training.

In the plots above, the training accuracy increases linearly over time, whereas validation accuracy stalls around 60%.

Updated code is now returning output that begins [[ 0. Shouldn't it be between 0 and 1?

The IR consists of the model configuration in .xml format and the weights in .bin format.

Method 4: bootstrap.

On Lines 48 and 49, we check whether the Boolean value is false, which would indicate that the frame was not read correctly. For more on input pipelines, see the tf.data documentation.

For example, for security, traffic management, manufacturing, healthcare, and agriculture applications, a coin-size edge device like OAK-D can be great hardware for deploying your deep learning models.

It's actually quite easy to do it with Bayesian deep learning.

You can pass a Dataset instance as the validation_data argument in fit(). At the end of each epoch, the model will iterate over the validation dataset and compute the validation loss and validation metrics.

Weighting is commonly used in imbalanced classification problems (the idea being to give more weight to rarely seen classes).

With this, we have come to the end of the OAK-101 series.

The pipeline object returned by the function is assigned to a variable; it is a pipeline that is ready to process images and perform inference. Next, the function extracts the class label by getting the index of the maximum probability and then using it to look up the corresponding label.

Note that the validation data is the last fraction of the samples received by the fit() call, taken before any shuffling.

You might want to search a bit, perhaps also using other keywords like "forecast distributions" or "predictive densities".

You could use Model.fit(..., class_weight={0: 1., 1: 0.5}). You can easily use a static learning rate decay schedule by passing a schedule object as the learning_rate argument of your optimizer.

I'm new to TensorFlow and object detection, and any help would be greatly appreciated! For large sample sizes (which are quite common in ML), it is generally safe to make this assumption. There are actually ways of doing this using dropout.
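One dropout-based approach is Monte Carlo dropout: keep dropout active at prediction time, run many stochastic forward passes, and treat the spread of the outputs as an uncertainty estimate (in Keras this is typically done by calling the model with training=True). The sketch below is a toy, dependency-free illustration using a hypothetical three-weight linear model rather than a real network, so only the sampling logic carries over.

```python
import random
import statistics

def dropout_forward(x, weights, bias, p_drop, rng):
    """One stochastic pass of a tiny linear model with inverted dropout on its inputs."""
    keep = 1.0 - p_drop
    total = bias
    for xi, wi in zip(x, weights):
        if rng.random() < keep:          # keep this input unit
            total += wi * xi / keep      # rescale so the expectation is unchanged
    return total

def mc_dropout_predict(x, weights, bias, p_drop=0.5, n_samples=1000, seed=0):
    """Mean and standard deviation over repeated dropout-enabled passes."""
    rng = random.Random(seed)
    preds = [dropout_forward(x, weights, bias, p_drop, rng) for _ in range(n_samples)]
    return statistics.mean(preds), statistics.stdev(preds)

# Hypothetical weights and input; the deterministic output would be 0.7
mu, sd = mc_dropout_predict([1.0, 2.0, 3.0], weights=[0.5, -0.2, 0.1], bias=0.3)
print(f"prediction {mu:.3f} +/- {sd:.3f}")
```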
With the frame and neural network data queues defined and the frame postprocessing helper function in place, we start the while loop on Line 45.

So it says that I think the real response lies in [20-5, 20+5], but to really understand what that means, we need to understand the real phenomenon and the mathematical model.

The returned history object holds a record of the loss values and metric values during training.

Common two-sided choices for $z_N$ are 1.64 for 90% confidence, 1.96 for 95%, and 2.58 for 99%.

In terms of directly outputting prediction intervals, there's a 2011 paper, 'Comprehensive Review of Neural Network-Based Prediction Intervals', which reviews several approaches. Method 1: delta method.

A custom loss function can, for example, compute the mean squared error between the real data and the predictions.

I believe the factorized top-3 metric on the testing dataset will give you the percentage of the time the actual selection was in the top 3 recommendations, which may suit you.

This example demonstrates how to use optimizers, losses, and metrics.
In last week's tutorial, we trained an image classification model on a vegetable image dataset in the TensorFlow framework. The elapsed time and approximate FPS are printed to the console on Lines 106 and 107.

To follow this guide, you need to have depthai, opencv, and imutils installed on your system.

Create a new neural network with tf.keras.layers.Dropout before training it using the augmented images. After applying data augmentation and tf.keras.layers.Dropout, there is less overfitting than before, and training and validation accuracy are more closely aligned. Use your model to classify an image that wasn't included in the training or validation sets.

Method 2: Bayesian method.

Transpose the array's dimensions to (height, width, 3).
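The channel reordering used in this pipeline (channels first for the network input, and back to (height, width, 3) for display) is just an axis permutation. With NumPy it is typically frame.transpose(2, 0, 1) and planar.transpose(1, 2, 0); the dependency-free sketch below spells out the same index mapping on nested lists, using a made-up 2x2 image.

```python
def hwc_to_chw(image):
    """Convert image[row][col][channel] to planar[channel][row][col]."""
    rows, cols, chans = len(image), len(image[0]), len(image[0][0])
    return [[[image[r][c][ch] for c in range(cols)] for r in range(rows)]
            for ch in range(chans)]

def chw_to_hwc(planar):
    """Inverse: planar[channel][row][col] back to image[row][col][channel]."""
    chans, rows, cols = len(planar), len(planar[0]), len(planar[0][0])
    return [[[planar[ch][r][c] for ch in range(chans)] for c in range(cols)]
            for r in range(rows)]

# A hypothetical 2x2 RGB image: each pixel is [R, G, B]
img = [[[255, 0, 0], [0, 255, 0]],
       [[0, 0, 255], [10, 20, 30]]]
planar = hwc_to_chw(img)
print(planar[0])  # red plane: [[255, 0], [0, 10]]
```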
For fast prototyping, people create "something" that seems to work "somehow" and check it with cross-validation; for a more principled uncertainty estimate, it is actually quite easy to do with Bayesian deep learning.

You can call .numpy() on the image_batch and labels_batch tensors to convert them to a numpy.ndarray. A single prediction can be read with prediction = model.predict(sample)[0]. The values in each row of the output sum up to 1 because the final layer of our model uses a softmax activation function.
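To see why each row sums to 1 and how a confidence score falls out of it, here is a NumPy sketch of a row-wise softmax over raw scores (logits) followed by picking the top class. The logits are made-up numbers; with a real Keras model whose final layer is a softmax, `model.predict(...)` would already return these probabilities.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 0.5, 3.0]])
probs = softmax(logits)
print(probs.sum(axis=1))          # [1. 1.]
predicted = probs.argmax(axis=1)  # index of the most likely class per row
confidence = probs.max(axis=1)    # its probability, used as the confidence score
print(predicted)  # [0 2]
```

The first row gives class 0 with a probability of about 0.66; treating that maximum probability as "confidence" is a convenient heuristic, not a calibrated uncertainty estimate.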
In Keras, there is a method called predict() that is available for both Sequential and Functional models. The closer the predicted probability is to 1, the more confident the model is. For this tutorial, choose the tf.keras.optimizers.Adam optimizer and the tf.keras.losses.SparseCategoricalCrossentropy loss function. Converting the model for the OAK involves a model optimizer and a Myriad Compiler. The color space of the frame is converted from BGR to RGB using the cv2.cvtColor() function.
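OpenCV stores color frames in BGR order, so the BGR-to-RGB step only reverses the channel axis. The NumPy sketch below shows the equivalent of `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` for a 3-channel frame, assuming an (height, width, 3) array; the helper name `bgr_to_rgb` is hypothetical and the sketch is for illustration only.

```python
import numpy as np

def bgr_to_rgb(frame_bgr):
    """Reverse the channel axis of an (H, W, 3) BGR frame to get RGB."""
    if frame_bgr.ndim != 3 or frame_bgr.shape[-1] != 3:
        raise ValueError("expected an (H, W, 3) frame")
    # Slicing with ::-1 returns a view; copy so the result is contiguous.
    return frame_bgr[..., ::-1].copy()

frame = np.zeros((1, 2, 3), dtype=np.uint8)
frame[..., 0] = 255          # pure blue in BGR order
rgb = bgr_to_rgb(frame)
print(rgb[0, 0])             # [  0   0 255]
```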
We define two output queues, one for the RGB frames and one for the neural network data. The input stream name is used to specify the input source for the pipeline.
TensorFlow is an open-source machine learning software library for numerical computation using data flow graphs. Keras preprocessing layers can be included in your model like other layers, and run on the GPU.

The F1 score combines precision and recall into a single number:
$$F_1 = 2\,\frac{\text{precision}\cdot\text{recall}}{\text{precision}+\text{recall}}$$
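The F1 formula can be checked with a few lines of Python. The sketch below computes precision, recall, and F1 from raw true-positive, false-positive, and false-negative counts; the counts in the usage line are made up, and returning 0.0 when a denominator is zero is one common convention, not the only one.

```python
def f1_score(tp, fp, fn):
    """F1 = 2 * precision * recall / (precision + recall), from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# 8 true positives, 2 false positives, 4 false negatives:
# precision = 0.8, recall = 8/12, F1 is their harmonic mean.
print(round(f1_score(8, 2, 4), 3))  # 0.727
```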

How about modeling the error on the training set to "predict" the error at inference time?

Earlier work [20] set out to exhibit the capability of AI in determining disease progression from CT scans. I strongly believe that if you had the right teacher, you could master computer vision and deep learning. This article will start with the basic concepts of the confidence interval and hypothesis testing, and then illustrate each concept with examples.

You're already using softmax in the setup; just apply it to the final vector to convert it to probabilities.

The codec being used is XVID. The dataset contains five sub-directories, one per class. After downloading, you should now have a copy of the dataset available. Processed TensorFlow files are available from the indicated URLs. We first need to review our project directory structure.

Scientists use some preliminary assumptions (called axioms) to derive results. For the cost function, you can use the NLPD (negative log probability density).
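For a model that predicts both a mean $\mu$ and a variance $\sigma^2$ for each target (the mean variance estimation setup), the Gaussian NLPD of one sample is $\frac{1}{2}\log(2\pi\sigma^2) + \frac{(y-\mu)^2}{2\sigma^2}$. The NumPy sketch below averages that over a batch. Predicting the log-variance rather than the variance itself is an assumption made here to keep $\sigma^2$ positive, and in a Keras model the same expression would be written with TensorFlow ops.

```python
import numpy as np

def gaussian_nlpd(y_true, mean, log_var):
    """Mean negative log predictive density under N(mean, exp(log_var))."""
    y_true = np.asarray(y_true, dtype=np.float64)
    mean = np.asarray(mean, dtype=np.float64)
    log_var = np.asarray(log_var, dtype=np.float64)
    var = np.exp(log_var)
    # 0.5 * log(2*pi*var) + (y - mean)^2 / (2*var), per sample
    per_sample = 0.5 * (np.log(2 * np.pi) + log_var + (y_true - mean) ** 2 / var)
    return float(per_sample.mean())

y = np.array([20.0, 22.0])
mu = np.array([20.0, 20.0])
# Same errors, two different predicted variances for the second sample:
print(gaussian_nlpd(y, mu, np.log([1.0, 1.0])))
print(gaussian_nlpd(y, mu, np.log([1.0, 4.0])))
```

Widening the predicted variance for the sample with the larger error lowers the loss (about 1.52 versus 1.92 here), which is what lets a network learn input-dependent uncertainty instead of a single fixed error bar.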

It's also possible to train a Keras model using Pandas dataframes, or from Python generators that yield batches of data and labels. For the top-K metric, see https://www.tensorflow.org/recommenders/api_docs/python/tfrs/metrics/FactorizedTopK.

Custom layers can use the add_loss() method inside call() to track extra loss terms such as activity regularization (activity regularization is built into all Keras layers; such a layer is just for the sake of providing a concrete example). You can do the same for logging metric values, using add_metric(). In the Functional API, you can also call model.add_loss(loss_tensor).

You can pass a tuple of NumPy arrays (x_val, y_val) to the model as validation_data for evaluating a validation loss and validation metrics at the end of each epoch. Alternatively, you can use validation_split to automatically reserve part of your training data for validation; the argument value represents the fraction of the data to be reserved for validation, so it should be a number higher than 0 and lower than 1. Training data can be passed as NumPy arrays (if your data is small and fits in memory) or as tf.data Dataset objects.

This example uses the MoveNet TensorFlow Lite pose estimation model from TensorFlow Hub. The keypoints detected are indexed by a part ID, with a confidence score between 0.0 and 1.0.

We learned the OAK hardware and software stack from the ground level. On Line 23, the classifierNN object is linked to the classifierIN object, which was created earlier to define the input stream name. GPUs are great because they take your neural network and train it quickly.

The utils.py script defines several functions. On Lines 2-6, we import the necessary packages, and on Line 8 we define the create_pipeline_images() function.

You have already tensorized that image and saved it as img_array. The confidence of that prediction is simply the probability of the top item.

How to find the confidence level of a classification?
3: Mean variance estimation

Note that at the point Y = E[Y|X] there is a minimum, not a maximum, and there are a lot of such subtle things. This section also describes the confidence of the model overall. Luckily, all these libraries are pip-installable.

Calculate confidence intervals based on a 95% confidence level.
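The error-rate interval $e \pm z_N\sqrt{\frac{e\,(1-e)}{n}}$ can be computed for a 95% confidence level with the Python standard library, taking $z_N$ from the standard normal distribution. This is a sketch of the normal-approximation interval only; the test-set numbers are invented, and the function name `error_confidence_interval` is hypothetical. The approximation is usually considered reasonable only when $n$ is large and $e$ is not close to 0 or 1.

```python
from math import sqrt
from statistics import NormalDist

def error_confidence_interval(errors, n, confidence=0.95):
    """Normal-approximation confidence interval for a classifier's error rate."""
    e = errors / n
    # Two-sided interval: z_N is the (1 + confidence) / 2 quantile, about 1.96 for 95%.
    z_n = NormalDist().inv_cdf((1 + confidence) / 2)
    half_width = z_n * sqrt(e * (1 - e) / n)
    return e - half_width, e + half_width

# 12 misclassified samples out of 200 gives an observed error rate e = 0.06.
low, high = error_confidence_interval(errors=12, n=200)
print(f"{low:.3f} to {high:.3f}")  # 0.027 to 0.093
```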
Let's now dive one step further and use the OAK's color camera to classify the frames, which, in our opinion, is where you put your OAK module to real use, catering to a wide variety of applications discussed in the 1st blog post of this series.

Besides the image input, the model has a time series input of shape (None, 10) (that's (timesteps, features)). In the Functional API, metrics can likewise be added with model.add_metric(metric_tensor, name, aggregation).

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

transition_params: A [num_tags, num_tags] matrix of binary potentials.

Image Classification using TensorFlow Pretrained Models
All the code that we write will go into the image_classification.py Python script. For models with several inputs or outputs, you can also pass dicts mapping input and output names to NumPy arrays.

Figure 2 shows the steps required to convert the deep learning model from frameworks like PyTorch or TensorFlow to the MyriadX blob file format and deploy it on the OAK device.

@Mario That is a very broad question; you can start with TensorFlow Probability, or see "Keras: How to obtain confidence of prediction class?"

At compilation time, we can specify different losses to different outputs by passing the loss functions as a list.

Conclusion
In this article, you learned how to deploy a TensorFlow CNN model to Heroku by serving it as a RESTful API, and by using Docker.

The professor wants the class to be able to score above 70 on the test.
