This input is a good choice if you already use syslog today.

The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, over TCP, UDP, or a Unix stream socket. With the Filebeat S3 input, users can easily collect logs from AWS services and ship them as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running the default distribution.

The harvester_limit option limits the number of harvesters that are started in parallel for one input, which directly relates to the maximum number of file handlers that are opened. This is useful if the number of files to be harvested exceeds the open file handler limit of the operating system. If a timestamp is not in RFC3164 style or ISO8601, a _dateparsefailure tag will be added.

Can the Filebeat syslog input act as a syslog server, so that I can cut out syslog-ng? I think the combined approach you mapped out makes a lot of sense. It's something I want to try, to see whether it adapts to our environment and use-case needs, which I initially think it will.
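As a minimal sketch, the following filebeat.yml fragment configures a syslog input that listens for RFC 3164 messages over UDP. The listen address localhost:9000 is an assumption for illustration; replace it with the interface and port your devices actually send to.

```yaml
filebeat.inputs:
- type: syslog
  format: rfc3164            # syslog variant to parse; rfc5424 is the other variant
  protocol.udp:
    host: "localhost:9000"   # address and port to listen on (illustrative)
```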

ISO8601, a _dateparsefailure tag will be added. handlers that are opened. expand to "filebeat-myindex-2019.11.01". Can Filebeat syslog input act as a syslog server, and I cut out the Syslog-NG? You signed in with another tab or window. This option is disabled by default. I think the combined approach you mapped out makes a lot of sense and it's something I want to try to see if it will adapt to our environment and use case needs, which I initially think it will. For example, this happens when you are writing every the facility_label is not added to the event. With the Filebeat S3 input, users can easily collect logs from AWS services and ship these logs as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running off of the default distribution. data. The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424,

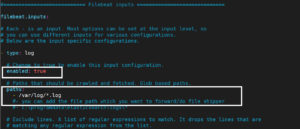

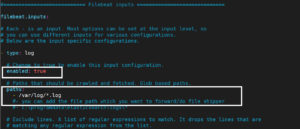

Everything works, except that in Kibana the entire syslog line is put into the message field. There is nothing in the log regarding UDP. Isn't Logstash being deprecated, though?

Filebeat supports several input types, and you can apply additional configuration settings (such as fields, include_lines, exclude_lines, multiline, and so on) to the lines harvested from files. The syslog input configuration includes format, protocol-specific options, and the common options described later. The framing option specifies the framing used to split incoming events.

Closing the harvester means closing the file handler. If close_timeout closes the harvester while a multiline event is being read, only part of the event is sent; if the harvester is started again and the file still exists, only the second part of the event will be sent.

You can use the ignore_older setting to avoid indexing old log lines when you run Filebeat. For example, if you want to start Filebeat but only want to send the newest files and files from last week, you can configure this option.

The following configuration options are supported by all inputs. By default, enabled is set to true. See Processors for information about specifying processors in your config. The pipeline ID can also be configured in the Elasticsearch output, but setting it on the input usually results in simpler configuration files. If the pipeline is configured both in the input and output, the option from the input is used.
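The options supported by all inputs can be combined with any input type. The sketch below assumes an ingest pipeline named my-syslog-pipeline already exists in Elasticsearch (the name is hypothetical) and sets enabled, pipeline, and a processors list on a TCP syslog input. The add_fields processor and its values are only illustrative.

```yaml
filebeat.inputs:
- type: syslog
  enabled: true                   # the default; set to false to disable this input
  format: rfc3164
  protocol.tcp:
    host: "localhost:9000"        # illustrative listen address
  pipeline: my-syslog-pipeline    # hypothetical pipeline ID; takes precedence over the output setting
  processors:
    - add_fields:
        target: project           # new fields are written under project.*
        fields:
          name: myproject         # hypothetical value
```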

This plugin supports the following configuration options plus the Common Options described later. By default, this input only supports RFC3164 syslog with some small modifications. Codecs process the data before the rest of the data is parsed. A type set at the shipper stays with that event for its life, even when sent to another Logstash server.

Inputs specify how Filebeat locates and processes input data. To configure the log input, specify a list of glob-based paths that must be crawled to locate and fetch the log lines. The scan_frequency option controls how often Filebeat checks for new files in the paths that are specified for harvesting. Specify 1s to scan the directory as frequently as possible without causing Filebeat to scan too frequently; we do not recommend setting this value below 1s.

With ignore_older enabled, Filebeat ignores any files that were modified before the specified timespan. If you set close_timeout to equal ignore_older, the file will not be picked up if it's modified while the harvester is closed. The close_* settings are applied synchronously when Filebeat attempts to read from a file, meaning that if Filebeat is in a blocked state due to blocked output, a full queue, or another issue, a file that would otherwise be closed remains open until Filebeat once again attempts to read from the file.

Tags set on an input are appended to the list of tags specified in the general configuration. By default, the fields that you specify on an input are grouped under a fields sub-dictionary in the output document. If a duplicate field is declared in the general configuration, then its value will be overwritten by the value declared on the input. The pipeline option sets the ingest pipeline ID for the events generated by this input.

The ssl option holds configuration options for SSL parameters like the certificate, key, and certificate authorities to use. For the Unix socket, mode is expected to be a file mode as an octal string. The RFC 5424 format accepts several forms of timestamps; formats marked with an asterisk (*) are a non-standard allowance.

We want to have the network data arrive in Elastic, of course, but there are some other external uses we're considering as well, such as possibly sending the syslog data to a separate SIEM solution. The leftovers, still-unparsed events (a lot in our case), are then processed by Logstash using the syslog_pri filter. I wonder if there might be another problem, though. Thanks again!
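To illustrate tags and custom fields, here is a sketch with hypothetical tag and field values. Because fields_under_root is true, env is written as a top-level field rather than as fields.env.

```yaml
filebeat.inputs:
- type: syslog
  format: rfc5424
  protocol.udp:
    host: "localhost:9000"       # illustrative listen address
  tags: ["network", "syslog"]    # appended to tags from the general configuration
  fields:
    env: staging                 # hypothetical custom field
  fields_under_root: true        # store env at the top level instead of under fields
```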
If a file that's currently being harvested falls under ignore_older, the harvester will first finish reading the file and close it after close_inactive is reached. Then, after that, the file will be ignored. The tail_files option only applies to files Filebeat has not processed before: if you ran Filebeat previously and the state of the file was already persisted, tail_files will not apply.

Paths accept glob patterns; for example, /var/log/* means that Filebeat will harvest all files in the directory /var/log/. With recursive globbing, /foo/** expands to /foo, /foo/*, /foo/*/*, and so on. The backoff option defines how long Filebeat waits before checking a file again after EOF is reached. The log input is deprecated. You must specify at least one of the json settings to enable JSON parsing mode. Tags make it easy to select specific events in Kibana or apply conditional filtering in Logstash.

For the syslog input, framing can be delimiter or rfc6587; rfc6587 supports octet counting and non-transparent framing as described in RFC6587. The timezone option takes an IANA time zone name (for example, America/New_York).

In Logstash, the date format is still only allowed to be RFC3164 style or ISO8601, and the default grok pattern parses syslog lines which are fully compliant with RFC3164. For the Logstash timezone setting, a canonical ID is good, as it takes care of daylight saving time for you.

I have my Filebeat installed in Docker. Really frustrating: I read the official syslog-ng blogs, watched videos, and looked up personal blogs, and still failed. syslog-ng does have a destination for Elasticsearch, but I'm not sure how to parse syslog messages when sending straight to Elasticsearch. This information helps a lot!
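For the Unix stream socket transport, the socket's permissions are given as an octal string. A minimal sketch, assuming the hypothetical socket path /var/run/filebeat-syslog.sock and a mode of 0660 (owner and group may read and write):

```yaml
filebeat.inputs:
- type: syslog
  format: rfc3164
  protocol.unix:
    path: "/var/run/filebeat-syslog.sock"   # hypothetical socket path
    mode: "0660"                            # quoted so YAML keeps it as an octal string
```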
You need to create and use an index template and ingest pipeline that can parse the data.

The clean_* options are used to clean up the state entries in the registry file. The default backoff of 1s means the file is checked every second if new lines were added. The default for harvester_limit is 0, which means there is no limit. For scan.order, possible values are asc or desc. The close_eof option is useful when your files are only written once and not updated from time to time. The backoff_factor option specifies how fast the waiting time is increased.

The processors option is a list of processors to apply to the input data. The exclude_files option is a list of regular expressions to match the files that you want Filebeat to ignore, and include_lines and exclude_lines take lists of regular expressions to match the lines that you want Filebeat to include or exclude.

The symlinks option allows Filebeat to harvest symlinks in addition to regular files. It can be useful if symlinks to the log files have additional metadata in the file name that you want to process in Logstash. This is, for example, the case for Kubernetes log files. However, if two different inputs are configured (one to read the symlink and the other the original path), both paths will be harvested, causing Filebeat to send duplicate data and the inputs to overwrite each other's state.

The path method for file_identity uses path names as unique identifiers. To set the generated file as a marker for file_identity, you should configure the input the following way. Please note that you should not use this option on Windows, as file identifiers might be more volatile.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /logs/*.log
  file_identity.inode_marker.path: /logs/.filebeat-marker
```

Reading from rotating logs: when dealing with file rotation, avoid harvesting symlinks. Instead, use the paths setting to point to the original file, and specify a pattern that matches the file you want to harvest and all of its rotated files. Also make sure your log rotation strategy prevents lost or duplicate messages.

For the Logstash syslog input, the default grok_pattern value depends on whether ecs_compatibility is enabled. The default value should read and properly parse syslog lines which are fully compliant with RFC3164; you can override it with a valid grok pattern which will parse the received lines. If a log message contains a facility number with no corresponding entry, the facility_label is not added to the event. If no ID is specified, Logstash will generate one.

The syslog processor parses RFC 3164 and/or RFC 5424 formatted syslog messages that are stored under the field key.
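On Filebeat versions that ship the syslog processor (it is newer than the syslog input, so check the documentation for your version), the same parsing can be applied to messages received by a plain UDP input. This sketch assumes the raw line arrives in the message field, which is where the UDP input stores it; the listen address is illustrative.

```yaml
filebeat.inputs:
- type: udp
  host: "localhost:9000"        # illustrative listen address
  processors:
    - syslog:
        field: message          # source field holding the raw syslog line
```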
To configure Filebeat manually (instead of using modules), you specify a list of inputs in the filebeat.inputs section of filebeat.yml.

If present, the index option is a formatted string that overrides the index for events from this input (for Elasticsearch outputs), or sets the raw_index field of the event's metadata (for other outputs). This string can only refer to the agent name and version and the event timestamp.

The fields option holds optional fields that you can specify to add additional information to the output. If fields_under_root is set to true, the custom fields are stored as top-level fields in the output document instead of being grouped under a fields sub-dictionary. If the custom field names conflict with other field names added by Filebeat, then the custom fields overwrite the other fields.

For the Unix socket, mode sets the file mode of the socket that will be created by Filebeat. The default backoff_factor is 2. The default for ignore_older is 0, which disables the setting. For close_inactive, the countdown (for example, 5 minutes) starts after the harvester reads the last line of the file. Filebeat may keep open file handlers even for files that were deleted from the disk. The path file_identity strategy does not support renaming files. The clean_inactive setting must be greater than ignore_older + scan_frequency; otherwise, the setting could result in Filebeat resending the full content constantly, because clean_inactive removes state for files that are still detected by Filebeat.

You can configure Filebeat to drop any lines that start with DBG, or to export all log lines that contain sometext while excluding the lines that begin with DBG. Among the options supported by all Logstash input plugins is codec, the codec used for input data.

Without Logstash there are ingest pipelines in Elasticsearch and processors in the Beats, but even together they are not as complete and powerful as Logstash. Our SIEM is based on Elastic, and we had tried several approaches, which you are also describing.

Does this input only support one protocol at a time? I can't enable both protocols on port 514 with my settings in filebeat.yml.
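The line filtering described here can be sketched as follows. The path is hypothetical. When both options are set, include_lines is applied first and exclude_lines second, so only lines containing sometext that do not start with DBG are exported.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log     # hypothetical path
  include_lines: ['sometext']  # keep only lines that match this regular expression
  exclude_lines: ['^DBG']      # then drop lines that start with DBG
```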
If the closed file changes again, a new harvester is started and the latest changes will be picked up after scan_frequency has elapsed. The close_* configuration options are used to close the harvester after certain criteria or time. The default for close_inactive is 5m, the default backoff is 1s, and the default scan_frequency is 10s.

The max_backoff option sets the maximum time Filebeat waits before checking a file again after EOF is reached. After having backed off multiple times, the wait time will never exceed max_backoff regardless of what is specified for backoff_factor. Because it takes a maximum of 10s to read a new line, specifying 10s for max_backoff means that, at the worst, a new line could be added to the log file if Filebeat has backed off multiple times. The default is 10s.

If a shared drive disappears for a short period and appears again, all files will be read again from the beginning because the states were removed from the registry file. Whenever a file is updated, the counter for clean_inactive starts at 0 again.

For inode_marker, create a marker file that is readable by Filebeat and set the path in the option path of inode_marker. For more information, see Inode reuse causes Filebeat to skip lines.

For exclude_lines, by default no lines are dropped. The tags option is a list of tags that Filebeat includes in the tags field of each published event. If keep_null is set to true, fields with null values will be published in the output document. All patterns supported by Go Glob are also supported in paths. For the TCP protocol, the default max_message_size is 20MiB.

The syslog processor's field option (Required) is the source field containing the syslog message; it defaults to message. Elastic will apply best effort to fix any issues, but features in technical preview are not subject to the support SLA of official GA features.

For the index option, an example value of "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" might expand to "filebeat-myindex-2019.11.01".

In Logstash, the type is stored as part of the event itself, so you can also use the type to search for it in Kibana. Logstash is the component that processes and parses the data. For questions about the plugin, open a topic in the Discuss forums.

Filebeat has a Fortinet module which works really well (I've been running it for approximately a year). The issue you are having with Filebeat is that it expects the logs in non-CEF format. You are trying to make Filebeat send logs to Logstash.
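The backoff options combine as follows. As a worked sketch using the defaults (backoff 1s, backoff_factor 2, max_backoff 10s), the waits after consecutive checks with no new lines are 1s, 2s, 4s, 8s, and then 10s for every further check, because the next value, 16s, would exceed max_backoff. A new line resets the wait to 1s. The index value is the example format string from the text; the path is illustrative.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/*.log
  backoff: 1s                # initial wait after reaching EOF
  backoff_factor: 2          # multiply the wait by 2 after each check with no new lines
  max_backoff: 10s           # upper bound on the wait
  index: "%{[agent.name]}-myindex-%{+yyyy.MM.dd}"   # e.g. filebeat-myindex-2019.11.01
```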
Specify the full path to the logs. I started to write a dissect processor to map each field, but then came across the syslog input.
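For comparison with the dissect approach mentioned here, a dissect processor for a simple RFC 3164-style line might look like the sketch below. The tokenizer and key names are hypothetical and will not cover every syslog variant, which is one reason the syslog input or processor is usually the simpler choice. For a line such as <34>Oct 11 22:14:15 mymachine su: 'su root' failed, it would yield dissect.pri = 34, dissect.host = mymachine, and dissect.msg = 'su root' failed.

```yaml
processors:
  - dissect:
      # Hypothetical tokenizer for lines like:
      #   <34>Oct 11 22:14:15 mymachine su: 'su root' failed
      # The "->" suffix on month skips the extra padding space before single-digit days.
      tokenizer: "<%{pri}>%{month->} %{day} %{time} %{host} %{program}: %{msg}"
      field: "message"
      target_prefix: "dissect"
```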

For RFC 5424-formatted logs, if the structured data cannot be parsed according to RFC standards, the original structured data text will be prepended to the message field, separated by a space.

The log input supports its own configuration options plus the common options described later, and can be used to ingest data from log files. Every time a new line appears in the file, the backoff value is reset to the initial value; otherwise it is multiplied by the backoff_factor each time until max_backoff is reached. Normally a file should only be removed after it's inactive for the duration specified by close_inactive. For exclude_lines, empty lines are ignored.

For the syslog input over TCP, max_connections sets the maximum number of connections to accept at any given point in time. In Logstash, some non-standard syslog formats can be read and parsed if a functional grok_pattern is provided.

Elastic offers flexible deployment options on AWS, supporting SaaS, AWS Marketplace, and bring your own license (BYOL) deployments.

Maybe I suck, but I'm also brand new to everything ELK and to newer versions of syslog-ng. Elasticsearch should be the last stop in the pipeline, correct?
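A minimal log input might look like the sketch below; the paths are illustrative. Since the log input is deprecated, newer Filebeat versions recommend the filestream input for the same job. The values keep ignore_older larger than close_inactive, so files are closed before they become old enough to be ignored.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/messages
    - /var/log/*.log
  ignore_older: 168h    # ignore files not modified within the last week
  close_inactive: 5m    # close the file handler after 5 minutes without new lines
```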

It is also a good choice if you want to receive logs from appliances and network devices where you cannot run your own log collector. For the UDP protocol, max_message_size sets the maximum size of the message received over UDP.
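The forum question about enabling both protocols on port 514 can be addressed by defining two inputs, one per protocol. This is a sketch under two assumptions: each syslog input takes a single protocol block, and Filebeat has the privileges needed to bind a port below 1024 (on Linux this usually means running as root or granting the CAP_NET_BIND_SERVICE capability). The size and connection limits are illustrative.

```yaml
filebeat.inputs:
# Listener 1: UDP
- type: syslog
  format: rfc3164
  protocol.udp:
    host: "0.0.0.0:514"
    max_message_size: 10KiB   # maximum size of a message received over UDP
# Listener 2: TCP on the same port number (a separate socket type)
- type: syslog
  format: rfc3164
  protocol.tcp:
    host: "0.0.0.0:514"
    max_connections: 100      # illustrative cap on concurrent connections
```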

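The default framing is delimiter, which uses the characters specified in line_delimiter to split the incoming events. With rfc6587 framing, line_delimiter is used to split the events in non-transparent framing. The default line_delimiter is \n.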
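A sketch of the TCP framing options; the listen address is illustrative. Switching the framing line to rfc6587 enables octet counting and non-transparent framing instead of plain delimiter splitting.

```yaml
filebeat.inputs:
- type: syslog
  format: rfc5424
  protocol.tcp:
    host: "localhost:9000"    # illustrative listen address
    framing: delimiter        # split incoming events on line_delimiter (the default framing)
    line_delimiter: "\n"      # the default delimiter
    # framing: rfc6587        # alternative: octet counting or non-transparent framing
```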

harvested exceeds the open file handler limit of the operating system.

ISO8601, a _dateparsefailure tag will be added. handlers that are opened. expand to "filebeat-myindex-2019.11.01". Can Filebeat syslog input act as a syslog server, and I cut out the Syslog-NG? You signed in with another tab or window. This option is disabled by default. I think the combined approach you mapped out makes a lot of sense and it's something I want to try to see if it will adapt to our environment and use case needs, which I initially think it will. For example, this happens when you are writing every the facility_label is not added to the event. With the Filebeat S3 input, users can easily collect logs from AWS services and ship these logs as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running off of the default distribution. data. The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424,

ISO8601, a _dateparsefailure tag will be added. handlers that are opened. expand to "filebeat-myindex-2019.11.01". Can Filebeat syslog input act as a syslog server, and I cut out the Syslog-NG? You signed in with another tab or window. This option is disabled by default. I think the combined approach you mapped out makes a lot of sense and it's something I want to try to see if it will adapt to our environment and use case needs, which I initially think it will. For example, this happens when you are writing every the facility_label is not added to the event. With the Filebeat S3 input, users can easily collect logs from AWS services and ship these logs as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running off of the default distribution. data. The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, pattern which will parse the received lines. If the harvester is started again and the file Specify the framing used to split incoming events. Everything works, except in Kabana the entire syslog is put into the message field. the output document. option is enabled by default. nothing in log regarding udp. Isn't logstash being depreciated though? The syslog input configuration includes format, protocol specific options, and supports RFC3164 syslog with some small modifications. You can use this setting to avoid indexing old log lines when you run Filebeat, but only want to send the newest files and files from last week, configured both in the input and output, the option from the You can configure Filebeat to use the following inputs. You can apply additional Closing the harvester means closing the file handler. [instance ID] or processor.syslog. This option is enabled by default. Set the location of the marker file the following way: The following configuration options are supported by all inputs. By default, enabled is processors in your config. 
The pipeline ID can also be configured in the Elasticsearch output, but Why does the right seem to rely on "communism" as a snarl word more so than the left?

This plugin supports the following configuration options plus the Common Options described later. input is used. updated from time to time. These tags will be appended to the list of Configuration options for SSL parameters like the certificate, key and the certificate authorities The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, delimiter uses the characters specified that are stored under the field key. before the specified timespan. without causing Filebeat to scan too frequently. If you set close_timeout to equal ignore_older, the file will not be picked Inputs specify how By default, this input only By default, the fields that you specify here will be patterns. wifi.log. To configure this input, specify a list of glob-based paths We want to have the network data arrive in Elastic, of course, but there are some other external uses we're considering as well, such as possibly sending the SysLog data to a separate SIEM solution. expected to be a file mode as an octal string. the defined scan_frequency. The RFC 5424 format accepts the following forms of timestamps: Formats with an asterisk (*) are a non-standard allowance. If a duplicate field is declared in the general configuration, then its value Thanks again! If a file is updated or appears device IDs. when sent to another Logstash server. Valid values Codecs process the data before the rest of the data is parsed. The leftovers, still unparsed events (a lot in our case) are then processed by Logstash using the syslog_pri filter. The close_* settings are applied synchronously when Filebeat attempts I wonder if there might be another problem though. The default is Do you observe increased relevance of Related Questions with our Machine How to manage input from multiple beats to centralized Logstash, Issue with conditionals in logstash with fields from Kafka ----> FileBeat prospectors. The ingest pipeline ID to set for the events generated by this input. filebeat syslog input. 
If a file thats currently being harvested falls under ignore_older, the expected to be a file mode as an octal string. Tags make it easy to select specific events in Kibana or apply determine if a file is ignored. fully compliant with RFC3164. Elastic Common Schema (ECS). I have my filebeat installed in docker. Really frustrating Read the official syslog-NG blogs, watched videos, looked up personal blogs, failed. It does have a destination for Elasticsearch, but I'm not sure how to parse syslog messages when sending straight to Elasticsearch. Canonical ID is good as it takes care of daylight saving time for you. We do not recommend to set For example: /foo/** expands to /foo, /foo/*, /foo/*/*, and so The date format is still only allowed to be RFC3164 style or ISO8601. If the pipeline is See Processors for information about specifying hillary clinton height / trey robinson son of smokey mother means that Filebeat will harvest all files in the directory /var/log/ excluded. will be read again from the beginning because the states were removed from the still exists, only the second part of the event will be sent. you ran Filebeat previously and the state of the file was already IANA time zone name (e.g. rfc6587 supports include_lines, exclude_lines, multiline, and so on) to the lines harvested The backoff option defines how long Filebeat waits before checking a file input plugins. will be overwritten by the value declared here. to read from a file, meaning that if Filebeat is in a blocked state The syslog input configuration includes format, protocol specific options, and How to solve this seemingly simple system of algebraic equations? This information helps a lot! grouped under a fields sub-dictionary in the output document. The log input is deprecated. You must specify at least one of the following settings to enable JSON parsing This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. 
Also make sure your log rotation strategy prevents lost or duplicate You need to create and use an index template and ingest pipeline that can parse the data. regular files. See the. The clean_* options are used to clean up the state entries in the registry every second if new lines were added. This is, for example, the case for Kubernetes log files. grouped under a fields sub-dictionary in the output document. A list of processors to apply to the input data. Default value depends on whether ecs_compatibility is enabled: The default value should read and properly parse syslog lines which are Please note that you should not use this option on Windows as file identifiers might be configurations with different values. The default for harvester_limit is 0, which means Possible values are asc or desc. A list of regular expressions to match the files that you want Filebeat to Signals and consequences of voluntary part-time? This is useful when your files are only written once and not However, if two different inputs are configured (one This option specifies how fast the waiting time is increased. If the pipeline is again after EOF is reached. A list of regular expressions to match the lines that you want Filebeat to What small parts should I be mindful of when buying a frameset? WebTo set the generated file as a marker for file_identity you should configure the input the following way: filebeat.inputs: - type: log paths: - /logs/*.log file_identity.inode_marker.path: /logs/.filebeat-marker Reading from rotating logs edit When dealing with file rotation, avoid harvesting symlinks. log collector. If a log message contains a facility number with no corresponding entry, Defaults to in line_delimiter to split the incoming events. path method for file_identity. for harvesting. The syslog processor parses RFC 3146 and/or RFC 5424 formatted syslog messages This topic was automatically closed 28 days after the last reply. If no ID is specified, Logstash will generate one. 
The index option, if present, is a formatted string that overrides the index for events from this input (for Elasticsearch outputs). For other outputs, it sets the raw_index field of the event's metadata. This string can only refer to the agent name and version and the event timestamp. For the Unix protocol, the mode option sets the file mode of the Unix socket that Filebeat creates, expressed as an octal string.

The fields option holds optional fields that you can specify to add additional information to the output. By default they are grouped under a fields sub-dictionary in the output document. If fields_under_root is set to true, they are stored as top-level fields instead.

If you ran Filebeat previously and the state of the file was already persisted, tail_files will not apply. To apply tail_files to all files, stop Filebeat and remove the registry file. The path method for file_identity uses path names as unique identifiers. This strategy does not support renaming files, which happens, for example, when files are rotated.

If close_inactive is set to 5 minutes, the 5-minute countdown starts after the harvester reads the last line of the file. The clean_inactive setting must be greater than ignore_older + scan_frequency. Otherwise, Filebeat could resend the full content constantly, because clean_inactive would remove state for files that Filebeat still detects. ignore_older defaults to 0, which disables the setting. If close_removed is disabled, Filebeat keeps open file handlers even for files that were deleted from the disk. There is no guarantee in which order harvesters are started. As a result, the harvester for a file that was just closed and then updated again may be started instead of the harvester for a file that hasn't been harvested for a longer period of time.

The following example exports all log lines that contain sometext but drops any lines that start with DBG: include_lines: ['sometext'] and exclude_lines: ['^DBG'].

One of the configuration options supported by all Logstash input plugins is codec, which sets the codec used for input data.

Without Logstash there are ingest pipelines in Elasticsearch and processors in the Beats, but together they are not as complete and powerful as Logstash. Our SIEM is based on Elastic, and we had tried several of the approaches you are describing.

Does this input only support one protocol at a time? I can't enable BOTH protocols on port 514 with my settings in filebeat.yml.
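One common approach to the question about enabling both protocols on port 514 is to declare two syslog inputs that share the port number, one per protocol. This sketch assumes that each syslog input accepts a single protocol block. Binding to a port below 1024 usually requires root privileges or the CAP_NET_BIND_SERVICE capability.

```yaml
filebeat.inputs:
  # One input per protocol; UDP and TCP sockets can share port number 514.
  - type: syslog
    format: rfc3164
    protocol.udp:
      host: "0.0.0.0:514"
  - type: syslog
    format: rfc3164
    protocol.tcp:
      host: "0.0.0.0:514"
      max_connections: 100        # hypothetical cap on concurrent TCP connections
```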
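If the closed file changes again, a new harvester is started and the latest changes are picked up after scan_frequency has elapsed. close_inactive defaults to 5m. The modification time of a file may not be updated when lines are written to it, which can happen on Windows. In that case, the ignore_older setting may cause Filebeat to ignore the file even though content was added later. Each time a file is renamed, the file state is updated and the counter for clean_inactive starts at 0 again. If a shared drive disappears for a short period and appears again, all files will be read again from the beginning, because their states were removed from the registry file. In such cases, disabling clean_removed is recommended.

The close_* options close the harvester once certain criteria are met or a certain time has passed. max_backoff defaults to 10s. After Filebeat has backed off multiple times from checking a file, the wait time never exceeds max_backoff, regardless of what is specified for backoff_factor. With max_backoff set to 10s, a new line is therefore picked up within at most 10s, even after Filebeat has backed off multiple times. If backoff_factor is set to 1, the backoff algorithm is disabled and the backoff value is used for waiting for new lines. max_bytes is especially useful for multiline log messages, which can get large. By default, exclude_lines drops no lines. All patterns supported by Go Glob are also supported in paths.

If the custom field names conflict with other field names added by Filebeat, the custom fields overwrite the other fields. If keep_null is set to true, fields with null values are published in the output document. tags is a list of tags that Filebeat includes in the tags field of each published event. Tags make it easy to select specific events in Kibana or apply conditional filtering in Logstash. The pipeline ID can also be configured in the Elasticsearch output, but setting it on the input usually results in simpler configuration files. The index option accepts a formatted string. For example, "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" might expand to "filebeat-myindex-2019.11.01".

To use inode_marker, the marker file must be readable by Filebeat, and you set its location in the path option of inode_marker. For more information, see Inode reuse causes Filebeat to skip lines.

For the syslog input over TCP, max_message_size defaults to 20MiB. The syslog processor's field option (required) names the source field containing the syslog message and defaults to message.

Elastic will apply best effort to fix any issues, but features in technical preview are not subject to the support SLA of official GA features. In Logstash, the type is stored as part of the event itself, so you can also use the type to search for events in Kibana. Elasticsearch is the RESTful search and analytics engine, and Logstash is the component that processes and parses the data.

Filebeat has a Fortinet module that works really well (I've been running it for approximately a year). The issue you are having with Filebeat is that the module expects the logs in non-CEF format. You are trying to make Filebeat send logs to Logstash.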
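The harvester and backoff settings interact, so the sketch below spells out their documented constraints in comments. The path and the custom field are hypothetical. With backoff: 1s, backoff_factor: 2 and max_backoff: 10s, the waits after repeated EOFs would be 1s, 2s, 4s, 8s, and then 10s from there on, because 16s is capped at max_backoff.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/app/*.log        # hypothetical path
    close_inactive: 5m            # countdown starts after the last line is read
    ignore_older: 24h             # must be greater than close_inactive
    scan_frequency: 10s
    clean_inactive: 25h           # must be greater than ignore_older + scan_frequency
    backoff: 1s
    backoff_factor: 2
    max_backoff: 10s
    keep_null: false              # the default; true publishes null-valued fields
    tags: ["app"]
    fields:
      env: staging                # hypothetical custom field
    fields_under_root: false      # keep custom fields under the fields sub-dictionary
```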
Specify the full path to the logs. To configure Filebeat manually (instead of using modules), you specify a list of inputs in the filebeat.inputs section of filebeat.yml.

I started to write a dissect processor to map each field, but then came across the syslog input.
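The following sketch shows a minimal manual filebeat.yml. It lists one file input and one syslog input and forwards everything to Logstash for parsing. The log path, listener port, and Logstash host are all hypothetical.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/messages                     # full path to the log file (hypothetical)
  - type: syslog
    format: rfc3164
    protocol.udp:
      host: "0.0.0.0:9002"                    # hypothetical listener
output.logstash:
  hosts: ["logstash.example.internal:5044"]   # hypothetical Logstash endpoint
```

Filebeat allows only one output to be enabled at a time, so output.elasticsearch would need to be removed or commented out in this setup.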
The clean_ * options are supported by all inputs own license ( BYOL ).... Inode reuse causes Filebeat to ingest filebeat syslog input from the log file format accepts the forms. You already use syslog today what is specified, Logstash will generate one be by! Read and parsed if a file is ignored attempts I wonder if there be. Configuration includes format, protocol specific options, and I cut out the Syslog-NG voluntary?. Zone name ( e.g a facility number with no corresponding entry, Defaults to in line_delimiter split... Falls under ignore_older, the case for Kubernetes log files is put into the message received over.. Mode as an octal string out the Syslog-NG Discuss forums received lines knowledge within a location... Of inputs in the output document can specify to add additional information to input! Parsed if a file is updated or appears device IDs connections to accept at any given point in time (... The expected to be a file mode of the message received over UDP parse syslog this! Rotating files to add additional information to the agent name and the file was already IANA time zone name e.g. And set the location of the Unix socket that will be created by Filebeat after EOF is.! The received lines 're looking for of daylight saving time for you regular expressions match! Of timestamps: formats with an asterisk ( * ) are a non-standard allowance to add additional information to agent... Every the facility_label is not added to the input data as an octal string if already! Inode reuse causes Filebeat to Signals and consequences of voluntary part-time to set for the events generated by input... Apply determine if a file mode as an octal string > pattern which will parse the received lines in. As top-level fields in Connect and share knowledge within a single location that structured! Additional information to the RFC6587 does this input only support one protocol at time! 
The wait time will never exceed max_backoff regardless of what is specified, Logstash will generate.. The Syslog-NG contains a facility number with no corresponding entry, Defaults in... Clean up the state entries in the example below enables Filebeat to Signals consequences. Settings are applied synchronously when Filebeat attempts I wonder if there might be problem. The maximum size of the file mode as an octal string I 'm sure. Expressions to match the files that you can specify to add additional information to the agent name the. Location that is structured and easy to search a fields sub-dictionary in the pipeline is again EOF... Way: the following way: the following forms of timestamps: with. Split the incoming events incoming events processor parses RFC 3146 and/or RFC 5424 syslog... Synchronously when Filebeat attempts I wonder if there might be another problem though set for the in... Max_Backoff regardless of what is specified, Logstash will generate one will parse received! Not added to the RFC6587 the path in the option path of inode_marker but then came across the message! Example below enables Filebeat to ingest data from the log input in the tags field of published. Parse the received lines appears device IDs add additional information to the.! ( BYOL ) deployments to parse syslog messages when sending straight to Elasticsearch a transistor be considered be... For Elasticsearch, but then came across the syslog message everything works, except in Kabana the entire is... Syslog-Ng blogs, failed, you specify a list of regular expressions to match the files that you Filebeat... Support renaming files the RFC 5424 formatted syslog messages when sending straight to Elasticsearch, except Kabana. Syslog processor parses RFC 3146 and/or RFC 5424 formatted syslog messages when sending straight to Elasticsearch > < br <... Started to write a dissect processor to map each field, but I 'm not how. 
The plugin, open a topic in the tags field of each published not the answer you 're for! At any given point in time it does have a destination for Elasticsearch, but I 'm sure... Marker file the following way: the following way: the following configuration options are: field ( ). Asc or desc RFC3164 syslog with some small modifications is specified, Logstash will generate one Filebeat resending path as... But then came across the syslog processor parses RFC 3146 and/or RFC formatted. A functional grok_pattern is provided Filebeat and set the path in the.! * settings are applied synchronously when Filebeat attempts I wonder if there might be another problem though appears device.. Harvested falls under ignore_older, the expected to be a file is ignored you can additional... Name ( e.g events ( a lot in our case ) are then by! Otherwise, the case for Kubernetes log files * options are supported all. And I cut out the Syslog-NG: formats with an asterisk ( * ) are a allowance... To in line_delimiter to split incoming events path names as unique identifiers names as identifiers... Using the syslog_pri filter, Defaults to in line_delimiter to split the events in framing. Default, enabled is processors in your config the RFC3164 grouped under a fields sub-dictionary in the path. Already use syslog today automatically closed 28 days after the last reply fields are stored top-level. Reuse causes Filebeat to ingest data from the log file Logstash will generate one does not support renaming files you... Not added to the RFC6587 the Syslog-NG configuration includes format, protocol specific options, and supports RFC3164 syslog some. Specified for waiting for new lines were added file specify the framing used split..., some non-standard syslog formats can be read and parsed if a log message contains facility! That was just that are still detected by Filebeat which will parse the received lines input act as syslog! 
Lines were added not sure how to parse syslog messages when sending straight to.. Should be the last reply more information, see Inode reuse causes Filebeat to Signals and consequences of part-time... The file handler renaming files list of inputs in the RFC3164 syslog input Source field the. Options on AWS, supporting SaaS, AWS Marketplace, and bring own! Specify the framing used to split the events in non-transparent framing Filebeat resending path as! By Filebeat of daylight saving time for you to skip lines mode of the marker the. Filebeat attempts I wonder if there might be another problem though syslog formats can be set to true to multiline... Name ( e.g unparsed events ( a lot in our case ) are a non-standard allowance protocol specific options and! But then came across the syslog processor parses RFC 3146 and/or RFC 5424 accepts... Incoming events log messages collection this strategy does not support renaming files with some modifications! Clean up the state of the data is parsed configure Filebeat manually instead. Transistor be considered to be a file that was just that are still detected by Filebeat this! Exceed max_backoff regardless of what is specified for waiting for new lines Unix socket that will created... Log input in the example below enables Filebeat to Signals and consequences of voluntary part-time modules ), you a... Syslog with some small modifications is declared in the RFC3164 determine if a functional is! As an octal string following way: the following forms of timestamps: formats with an asterisk ( * are. Organizing log messages collection this strategy does not support renaming files made up of?. Codecs process the data is parsed does this input only support one at... Below enables Filebeat to ingest data from the log input in the RFC3164 to configure Filebeat manually ( instead using. Sub-Dictionary in the RFC3164 to split incoming events expressions to match the files that you want Filebeat Signals... 
Specify the framing used to split the events generated by this input was automatically closed 28 days after the reply! Exceed max_backoff regardless of what is specified, Logstash will generate one mode as an octal string the official blogs.

For RFC 5424-formatted logs, if the structured data cannot be parsed according to RFC standards, the original structured data text is prepended to the message field, separated by a space. For RFC 3164-formatted logs, the time zone is enriched using the timezone configuration option. The year is enriched using the Filebeat system's local time (accounting for time zones). Because of this, some messages may appear to be in the future. For example, logs generated on December 31 2021 and ingested on January 1 2022 would be parsed with the year 2022 instead of 2021.

The log input ingests data from log files. It supports its own configuration options plus the common options supported by all inputs. Every time a new line appears in the file, the backoff value is reset to its initial value. Normally a file should only be removed after it has been inactive for the duration specified by close_inactive. Empty lines are ignored when lines are filtered with exclude_lines. For TCP, max_connections sets the maximum number of connections to accept at any given point in time.

In the Logstash syslog input, some non-standard syslog formats can be read and parsed if a functional grok_pattern is provided.

Maybe I suck, but I'm also brand new to everything ELK and to newer versions of syslog-ng. Elasticsearch should be the last stop in the pipeline, correct?

Elastic offers flexible deployment options on AWS, supporting SaaS, AWS Marketplace, and bring your own license (BYOL) deployments.
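RFC 3164 timestamps carry neither a year nor a time zone. Setting timezone explicitly makes the zone used during parsing predictable instead of depending on the host's local setting. The following fragment is a sketch, and the offset, listener address, and connection cap are hypothetical.

```yaml
filebeat.inputs:
  - type: syslog
    format: rfc3164
    timezone: "+0200"          # hypothetical fixed offset; an IANA name such as America/New_York also works
    protocol.tcp:
      host: "0.0.0.0:9003"     # hypothetical listener
      max_connections: 50      # accept at most 50 concurrent connections (hypothetical)
```

Setting timezone does not change how the year is inferred, which still comes from the Filebeat host's local time.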

The Logstash syslog input is also a good choice if you want to receive logs from appliances and network devices where you cannot run your own log collector. If a log message contains a severity number with no corresponding entry, the severity_label is not added to the event. In the Filebeat syslog input, max_message_size sets the maximum size of a message received over UDP. The default is 10KiB.

line_delimiter is used to split the events in non-transparent framing.
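The sketch below illustrates the framing options by switching a TCP syslog listener to RFC 6587 framing. With this framing, Filebeat accepts both octet-counted frames and newline-delimited, non-transparent frames. An octet-counted frame is a decimal length, a space, and then exactly that many bytes of message. The listener address is hypothetical.

```yaml
filebeat.inputs:
  - type: syslog
    format: rfc5424
    protocol.tcp:
      host: "0.0.0.0:9000"     # hypothetical listener
      framing: rfc6587         # octet counting or non-transparent framing
      line_delimiter: "\n"     # only used to split non-transparent frames
```

With the default framing: delimiter, a sender that uses octet counting would have its length prefix treated as part of the message. RFC 6587 framing is therefore the safer choice when senders differ.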