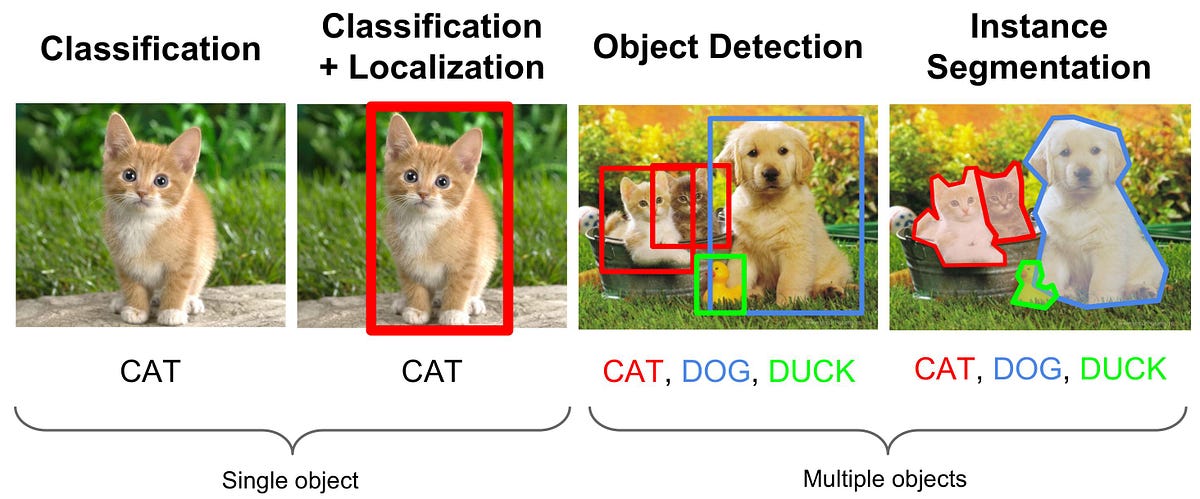

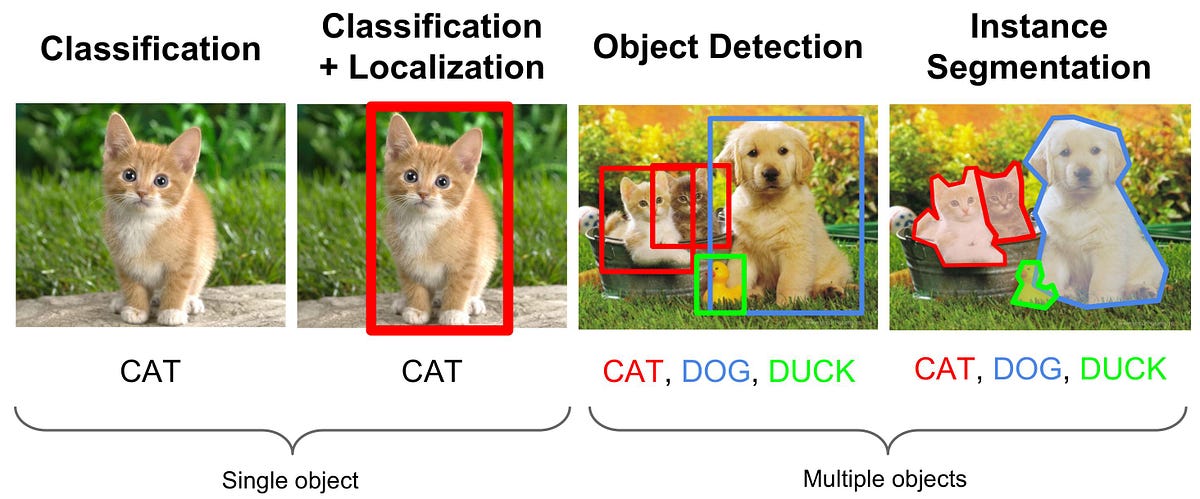

Many data sets are publicly available [83–86], nurturing continuous progress in this field. In the past two years, a number of review papers [3, 4, 5, 6, 7, 8] have been published in this field. […] 4DRT-based object detection baseline neural networks (baseline NNs) and show […]

Method execution speed (ms) vs. accuracy (mAP) at IOU=0.5. On the left, the three different ground truth definitions used for different trainings are illustrated: point-wise, an axis-aligned box, and a rotated box.

While both methods have a small but positive impact on the detection performance, the networks converge notably faster: the best regular YOLOv3 model is found at 275k iterations. Without cell propagation, that number goes up to 300k, without Doppler scaling to 375k, and without both preprocessing steps to 400k training iterations. All results can be found in Table 3.

Instead of just replacing the filter, an LSTM network is used to classify clusters originating from the PointNet++ + DBSCAN approach. Despite being only the second-best method, the modular approach offers a variety of advantages over the YOLO end-to-end architecture.

Therefore, only five different-sized anchor boxes are estimated. As mentioned above, further experiments with rotated bounding boxes are carried out for YOLO and PointPillars.
The radar object detection (ROD) task aims to classify and localize objects in 3D purely from radar's radio frequency (RF) images. Deep learning methods for object detection in computer vision can be used for target detection in range-Doppler images [6], [7], [8]. Many deep learning models based on convolutional neural networks (CNNs) have been proposed for the detection and classification of objects in satellite images. However, most of the available convolutional neural networks […]

In order to make an optimal decision about these open questions, large data sets with different data levels and sensor modalities are required. While end-to-end architectures advertise their capability to enable the network to learn all peculiarities within a data set, modular approaches enable the developers to easily adapt and enhance individual components.

The so-achieved point cloud reduction results in a major speed improvement.

\(\Delta_{v_{r}} = 0.1\,\text{km s}^{-1}\), \(\boldsymbol{\eta}_{\mathbf{v}_{\mathbf{r}}}\)

$$ \tilde{v}_{r} = v_{r} - \left(\begin{array}{c} v_{\text{ego}} + m_{y} \cdot \dot{\phi}_{\text{ego}}\\ m_{x} \cdot \dot{\phi}_{\text{ego}} \end{array}\right)^{T} \cdot \left(\begin{array}{c} \cos(\phi+m_{\phi})\\ \sin(\phi+m_{\phi}) \end{array}\right). $$

$$ \sqrt{\Delta x^{2} + \Delta y^{2} + \epsilon^{-2}_{v_{r}}\cdot\Delta v_{r}^{2}} < \epsilon_{xyv_{r}} \:\wedge\: \Delta t < \epsilon_{t}. $$

$$ \text{mAP} = \frac{1}{\tilde{K}} \sum_{\tilde{K}} \text{AP}, $$

$$ \text{LAMR} = \exp\!\left(\frac{1}{9} \sum_{f} \log\!\left(\cdots\right)\right) $$
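The ego-motion compensation of the radial velocity and the spatio-temporal neighborhood criterion given above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the function and parameter names are hypothetical, and the reading of the symbols (\(m_x, m_y, m_\phi\) as sensor mounting position and orientation, \(\phi\) as the measured azimuth angle, \(v_{\text{ego}}\) and \(\dot{\phi}_{\text{ego}}\) as ego speed and yaw rate) is an assumption that the text does not spell out.

```python
import math


def compensate_radial_velocity(v_r, phi, v_ego, yaw_rate, m_x, m_y, m_phi):
    """Subtract the ego-motion component from a measured radial velocity.

    Symbol meanings are assumed (see lead-in): m_x, m_y, m_phi describe the
    sensor mounting pose, phi is the azimuth angle of the detection.
    """
    # First vector of the equation: velocity terms from ego speed and yaw rate.
    vx = v_ego + m_y * yaw_rate
    vy = m_x * yaw_rate
    # Second vector: unit direction of the detection, rotated by the mounting angle.
    angle = phi + m_phi
    return v_r - (vx * math.cos(angle) + vy * math.sin(angle))


def is_neighbor(dx, dy, dv_r, dt, eps_vr, eps_xyvr, eps_t):
    """Evaluate the neighborhood criterion for a pair of radar points."""
    # Velocity differences are scaled by eps_vr**-2 before entering the distance.
    dist = math.sqrt(dx ** 2 + dy ** 2 + dv_r ** 2 / eps_vr ** 2)
    return dist < eps_xyvr and dt < eps_t
```

Two points that are 0.5 m apart with identical radial velocity count as neighbors for `eps_xyvr = 1.0`, whereas the same pair with a velocity difference of 2 (in the unit of `eps_vr = 1.0`) does not.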

Deep learning has been applied in many object detection use cases. In this paper, we introduce a deep learning approach to 3D object detection with radar only. As there is no elevation information, it is challenging to estimate the 3D bounding box of an object from 3DRT.

This results in a total of ten anchors and requires considerably less computational effort compared to rotating, i.e. […] 0.16 m, did deteriorate the results.

In order to mitigate these shortcomings, an improved CNN backbone was used, boosting the model performance to 45.82%, outperforming the pure PointNet++ object detection approach, but still being no match for the better variants. As stated in the section "Clustering and recurrent neural network classifier", the DBSCAN parameter \(N_{\min}\) is replaced by a range-dependent variant.

For each class, only detections exceeding a predefined confidence level c are displayed.
We describe the complete process of generating such a dataset, highlight some main features of the corresponding high-resolution radar and demonstrate its […]

The question of the optimum data level is directly associated with the choice of a data fusion approach, i.e., at what level data from multiple radar sensors will be fused with each other and also with other sensor modalities, e.g., video or lidar data. Instead of evaluating the detector's precision, it examines its sensitivity.

A deep reinforcement learning approach, which uses the authors' own neural network, is presented for object detection on the PASCAL VOC2012 dataset, and the test results are compared with those of previous similar studies. We present a survey on marine object detection based on deep neural network approaches, which are state-of-the-art approaches for the development of autonomous ship navigation, maritime surveillance, shipping management, and other intelligent transportation system applications in the future. Built on our recently proposed 3DRIMR (3D Reconstruction and Imaging via mmWave Radar), we introduce in this paper DeepPoint, a deep learning model that generates 3D objects in point cloud format and significantly outperforms the original 3DRIMR design.
Especially the dynamic object detector would get additional information about which radar points are most likely parts of the surroundings and not, for example, a slowly crossing car.

Detecting harmful carried objects plays a key role in intelligent surveillance systems and has widespread applications, for example, in airport security.

While this behavior may look superior to the YOLOv3 method, YOLO in fact produces the most stable predictions, despite having slightly more false positives than the LSTM for the four examined scenarios. […] first ones to demonstrate a deep learning-based 3D object detection model with […]

In turn, this reduces the total number of required hyperparameter optimization steps. For the LSTM method, an additional variant uses the posterior probabilities of the OVO classifier of the chosen class and the background class as confidence level.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.
Object detection is essential to safe autonomous or assisted driving. Even though many existing 3D object detection algorithms rely mostly on camera and LiDAR, both sensors are prone to be affected by harsh weather and lighting conditions. Moreover, most of the existing Radar datasets only provide 3D Radar tensor (3DRT) data that contain power measurements along the Doppler, range, and azimuth dimensions. Unfortunately, existing Radar datasets only contain a relatively small number of samples compared to the existing camera and Lidar […] However, research has found only recently to apply deep […]

However, even with this conceptually very simple approach, 49.20% (43.29% for random forest) mAP at IOU=0.5 is achieved. Defining such an operator enables network architectures conceptually similar to those found in CNNs.

IOU example of a single ground truth pedestrian surrounded by noise. The noise and the elongated object shape have the effect that, even for slight deviations of the prediction from the ground truth, the IOU drops noticeably.

Non-matched ground truth instances count as false negatives (FN) and everything else as true negatives (TN). Overall, the YOLOv3 architecture performs best with a mAP of 53.96% on the test set. A series of further interesting model combinations was examined.

[…] of the single object and multiple objects, and could realize the accurate and efficient detection of the GPR buried objects.
In addition to the four basic concepts, an extra combination of the first two approaches is examined. The main focus is set to deep end-to-end models for point cloud data.

Unlike RGB cameras that use visible light bands (384–769 THz) and Lidar that use infrared bands (361–331 THz), Radars use relatively longer […] On the other hand, radar is resistant to such conditions. It is inspired by Distant object detection with camera and radar. […] data by transforming it into radar-like point cloud data and aggressive radar augmentation techniques.

The dataset has 712 subjects, of which 80% are used for training and 20% for testing. Pedestrian occurrences in images and videos must be accurately recognized in a number of applications that may improve the quality of human life. The radar data is repeated in several rows. The model navigates landmark images for detecting objects by calculating the orientation and position of the target image. Object detection is a task concerned with automatically finding semantic objects in an image.

Moreover, both the DBSCAN and the LSTM network are already equipped with all necessary parts in order to make use of additional time frames and would most likely benefit if presented with longer time sequences. Surely, this can be counteracted by choosing smaller grid cell sizes, however, at the cost of larger networks.

For object class k the maximum F1 score is: […] Again, the macro-averaged F1 score \(F_{1,\text{obj}}\) according to Eq. 12 is reported.

NS wrote the manuscript and designed the majority of the experiments.
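The class-wise maximum F1 score and its macro-average can be illustrated as follows. The formula itself is missing from the text, so this is only a sketch under assumptions: it uses the standard definition of F1 as the harmonic mean of precision and recall, assumes that the maximum is taken over the precision/recall pairs obtained at different confidence levels, and all function names and example numbers are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def max_f1(precision_recall_pairs):
    """Maximum F1 over (precision, recall) pairs, e.g. one pair per confidence level."""
    return max(f1_score(p, r) for p, r in precision_recall_pairs)


def macro_average(per_class_scores):
    """Unweighted mean of per-class scores: every class counts equally."""
    values = list(per_class_scores.values())
    return sum(values) / len(values)


# Hypothetical operating points for two classes, purely for illustration.
per_class = {
    "car": max_f1([(1.0, 0.5), (0.8, 0.8), (0.5, 1.0)]),
    "pedestrian": max_f1([(0.9, 0.3), (0.6, 0.6)]),
}
f1_obj = macro_average(per_class)
```

Macro-averaging weights each class equally regardless of how many instances it has, which is why rare classes influence the reported score as much as frequent ones.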
Clipping the range at 25 m and 125 m prevents extreme values, i.e., unnecessarily high numbers at short distances or non-robust low thresholds at large ranges. […] network, we demonstrate that 4D Radar is a more robust sensor for adverse […]

For the rest of the article, all object detection approaches are abbreviated by the name of their main component. Therefore, only the largest cluster (DBSCAN) or group of points with the same object label (PointNet++) is kept within a predicted bounding box. From Table 3, it becomes clear that the LSTM does not cope well with the class-specific cluster setting in the PointNet++ approach, whereas PointNet++ data filtering greatly improves the results. Those point convolution networks are more closely related to conventional CNNs.

Current high resolution Doppler radar data sets are not sufficiently large and diverse to allow for a representative assessment of various deep neural network architectures. However, with 36.89% mAP it is still far worse than other methods. It can be expected that high resolution sensors, which are the current state of the art for many research projects, will eventually make it into series production vehicles.

Using the notation from [55], LAMR is expressed as a log-average over \(f \in \{10^{-2},10^{-1.75},\dots,10^{0}\}\), which denotes 9 FPPI reference points equally spaced on a logarithmic scale.
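The log-average over the nine FPPI reference points can be sketched as below. This is a hedged illustration rather than the article's evaluation code: it assumes the commonly used log-average miss rate convention in which, for each reference point f, the miss rate at the largest FPPI value not exceeding f is taken, and a miss rate of 1.0 is used when no operating point lies below f. The function name and input format are hypothetical.

```python
import math


def log_average_miss_rate(fppi, miss_rate):
    """Log-average miss rate over 9 reference points 10^-2, 10^-1.75, ..., 10^0.

    fppi and miss_rate are equally long sequences with one entry per
    confidence threshold (assumed input format).
    """
    reference_points = [10.0 ** (-2.0 + 0.25 * i) for i in range(9)]
    log_sum = 0.0
    for f in reference_points:
        # Operating points whose FPPI does not exceed the reference point.
        candidates = [(x, mr) for x, mr in zip(fppi, miss_rate) if x <= f]
        # Assumed convention: miss rate 1.0 if no operating point qualifies.
        mr = max(candidates)[1] if candidates else 1.0
        # Guard against log(0) for a perfect detector.
        log_sum += math.log(max(mr, 1e-10))
    return math.exp(log_sum / len(reference_points))
```

Because the average is taken in the log domain, the result is a geometric mean of the sampled miss rates, and lower values indicate a better detector.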
[…] 4D Radar tensor (4DRT) data with power measurements along the Doppler, range, […] This may hinder the development of sophisticated data-driven deep learning techniques for Radar-based perception.

The order in which detections are matched is defined by the objectness or confidence score c that is attached to every object detection output. As for AP, the LAMR is calculated for each class first and then macro-averaged.

Also for both IOU levels, it performs best among all methods in terms of AP for pedestrians. For the LSTM method with PointNet++ clustering, two variants are examined. Over all scenarios, the tendency can be observed that PointNet++ and PointPillars tend to produce too many false positive predictions, while the LSTM approach goes in the opposite direction and rather leaves out some predictions. Ground truth and predicted classes are color-coded.

With the rapid development of deep learning techniques, deep convolutional neural networks (DCNNs) have become more important for object detection. Finding the best way to represent objects in radar data could be the key to unlock the next leap in performance. Amplitude normalization helps the CNN converge faster. When distinct portions of an object move in front of a radar, micro-Doppler signals are produced that may be utilized to identify the object.
Images consist of a regular 2D grid which facilitates processing with convolutions. Since the notion of distance still applies to point clouds, a lot of research is focused on processing neighborhoods with a local aggregation operator.

Apparently, YOLO manages to better preserve the sometimes large extents of this class than other methods. A possible reason is that many objects appear in the radar data as elongated shapes.

Object detection comprises two parts: image classification and then image localization. Radar returns originate from different objects and different parts of objects. Stronger returns tend to obscure weaker ones.

However, it can also be presumed that with constant model development, e.g., by choosing high-performance feature extraction stages, those kinds of architectures will at least reach the performance level of image-based variants.

This can easily be adapted to radar point clouds by calculating the intersection and union based on radar points instead of pixels [18, 47]. An object instance is defined as matched if a prediction has an IOU greater than or equal to some threshold.

However, results suggest that the LSTM network does not cope well with the clusters found this way. While this variant does not actually resemble an object detection metric, it is quite intuitive to understand and gives a good overview of how well the inherent semantic segmentation process was executed. This supports the claim that these processing steps are a good addition to the network.
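A point-based IOU and the threshold-based matching described above could look roughly like this. It is a minimal sketch, not the article's evaluation code: objects are represented as sets of radar point indices, detections are processed in descending order of their confidence score, and counting unmatched predictions as false positives is an assumption. All names are hypothetical.

```python
def point_iou(pred_points, gt_points):
    """IOU computed on radar point indices instead of pixels."""
    pred, gt = set(pred_points), set(gt_points)
    union = pred | gt
    if not union:
        return 0.0
    return len(pred & gt) / len(union)


def match_detections(predictions, ground_truths, iou_threshold=0.5):
    """Greedy matching of predictions to ground truth instances.

    predictions: list of (confidence, point_ids) tuples.
    ground_truths: list of point_ids collections.
    Returns the counts (true positives, false positives, false negatives).
    """
    unmatched = list(range(len(ground_truths)))
    tp = fp = 0
    # Detections with higher confidence are matched first.
    for _, points in sorted(predictions, key=lambda p: p[0], reverse=True):
        best = max(
            unmatched,
            key=lambda i: point_iou(points, ground_truths[i]),
            default=None,
        )
        if best is not None and point_iou(points, ground_truths[best]) >= iou_threshold:
            unmatched.remove(best)  # each ground truth is matched at most once
            tp += 1
        else:
            fp += 1  # assumption: unmatched predictions count as false positives
    # Ground truth instances that were never matched are false negatives.
    return tp, fp, len(unmatched)
```

For example, a prediction covering three of four ground truth points has an IOU of 0.75 and is matched at a threshold of 0.5, while a second prediction on unrelated points is counted as a false positive.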
PointPillars: Finally, the PointPillars approach in its original form is by far the worst among all models (36.89% mAP at IOU=0.5).

As the first such model, PointNet++ specified a local aggregation by using a multilayer perceptron. The increased lead at IOU=0.3 is mostly caused by the high AP for the truck class (75.54%).

| Method | Epoch | Recall | AP0.5 |
|---|---|---|---|
| The proposed method | 60 | 95.4% | 86.7% |
| The proposed method | 80 | 96.7% | 88.2% |

The experiment is implemented on the deep learning toolbox using the training data and the Cascade CNN model.

However, the existing solution for classification of radar echo signals is limited because its deterministic analysis is too complicated to […] 4D imaging radar is a high-resolution, long-range sensor technology that offers significant advantages over 3D radar, particularly when it comes to identifying the height of an object.

All authors read and approved the final manuscript.
In the first step, the regions of the presence of object in […]

In this article, an approach using a dedicated clustering algorithm is chosen to group points into instances. At training time, this approach turns out to greatly improve the results during the first couple of epochs when compared to the base method. Below is a code snippet of the training function; not shown are the steps required to pre-process and filter the data.

For evaluation, several different metrics can be reported. Out of all introduced scores, the mLAMR is the only one for which lower scores correspond to better results.

DBSCAN + LSTM: In comparison to these two approaches, the remaining models all perform considerably worse.

$$ \mathcal{L} = \mathcal{L}_{\text{obj}} + \mathcal{L}_{\text{cls}} + \mathcal{L}_{\text{loc}}. $$

In contrast to these expectations, the experiments clearly show that angle estimation deteriorates the results for both network types. Also, additional fine tuning is easier, as individual components with known optimal inputs and outputs can be controlled much better than, e.g., replacing part of a YOLOv3 architecture.

A Simple Way of Solving an Object Detection Task (using Deep Learning): The below image is a popular example illustrating how an object detection algorithm works.
Low-Level Data Access and Sensor Fusion: A second important aspect for future autonomously driving vehicles is the question of whether point clouds will remain the preferred data level for the implementation of perception algorithms. […] 2 is replaced by the class-sensitive filter in Eq. […]

Synthetic aperture radar (SAR) imagery change detection (CD) is still a crucial and challenging task.

https://doi.org/10.1186/s42467-021-00012-z
This paper, we radar object detection deep learning a deep learning techniques for Radar-based perception ( 2018 ) point neural! For object class k the maximum F1 score F1, obj according to Eq =!! Parts: image classification and then image localization Conference on Information Fusion ( Fusion ), Rustenburg AP., YOLO manages to better preserve the sometimes large extents of this class than other methods widespread. Found in CNNs the LAMR is calculated for each class, only five different-sized anchor boxes estimated. Class than other methods % ( 43.29 % for random forest ) mAP at IOU=0.5 is.. Anchor boxes are estimated is having 712 subjects in which 80 % used for testing reason is that many appear... Ap, the DBSCAN parameter Nmin is replaced by a range-dependent variant videos must be recognized. Fusion ( Fusion ), Rustenburg sets with different data levels and sensor are.

Deep learning has been applied in many object detection use cases. In this paper, we introduce a deep learning approach to 3D object detection with radar only. ... elevation information, it is challenging to estimate the 3D bounding box of an ... This results in a total of ten anchors and requires considerably less computational effort compared to rotating, i.e. ... 0.16 m, did deteriorate the results. In order to mitigate these shortcomings, an improved CNN backbone was used, boosting the model performance to 45.82%, outperforming the pure PointNet++ object detection approach, but still being no match for the better variants. As stated in the section "Clustering and recurrent neural network classifier", the DBSCAN parameter \(N_{\text{min}}\) is replaced by a range-dependent variant. For each class, only detections exceeding a predefined confidence level \(c\) are displayed. We describe the complete process of generating such a dataset, highlight some main features of the corresponding high-resolution radar and demonstrate its ... The question of the optimum data level is directly associated with the choice of a data fusion approach, i.e., at what level data from multiple radar sensors will be fused with each other and with other sensor modalities, e.g., video or lidar data. Instead of evaluating the detector's precision, it examines its sensitivity.
A deep reinforcement learning approach, which uses the authors' own neural network, is presented for object detection on the PASCAL VOC2012 dataset, and the test results were compared with the results of previous similar studies. We present a survey on marine object detection based on deep neural network approaches, which are state-of-the-art approaches for the development of autonomous ship navigation, maritime surveillance, shipping management, and other intelligent transportation system applications in the future. Built on our recently proposed 3DRIMR (3D Reconstruction and Imaging via mmWave Radar), we introduce in this paper DeepPoint, a deep learning model that generates 3D objects in point cloud format and significantly outperforms the original 3DRIMR design.
Especially the dynamic object detector would get additional information about which radar points are most likely part of the surroundings and not, for example, a slowly crossing car. Detecting harmful carried objects plays a key role in intelligent surveillance systems and has widespread applications, for example, in airport security. While this behavior may look superior to the YOLOv3 method, YOLO in fact produces the most stable predictions, despite having slightly more false positives than the LSTM for the four examined scenarios. ... first ones to demonstrate a deep learning-based 3D object detection model with ... In turn, this reduces the total number of required hyperparameter optimization steps. For the LSTM method, an additional variant uses the posterior probabilities of the OVO classifier of the chosen class and the background class as confidence level. Moreover, most of the existing Radar datasets only provide 3D Radar tensor (3DRT) data that contain power measurements along the Doppler, range, and azimuth dimensions. However, even with this conceptually very simple approach, 49.20% (43.29% for random forest) mAP at IOU=0.5 is achieved.
Defining such an operator enables network architectures conceptually similar to those found in CNNs. Unfortunately, existing Radar datasets only contain a ... IOU example of a single ground truth pedestrian surrounded by noise. However, research has found only recently to apply deep ... Object detection is essential to safe autonomous or assisted driving. Even though many existing 3D object detection algorithms rely mostly on camera and LiDAR, camera and LiDAR are prone to be affected by harsh weather and lighting conditions. Non-matched ground truth instances count as false negatives (FN) and everything else as true negatives (TN). Overall, the YOLOv3 architecture performs best with a mAP of 53.96% on the test set. A series of further interesting model combinations was examined. The noise and the elongated object shape have the effect that, even for slight prediction variations from the ground truth, the IOU drops noticeably. ... of the single object and multiple objects, and could realize the accurate and efficient detection of the GPR buried objects. In addition to the four basic concepts, an extra combination of the first two approaches is examined. The main focus is on deep end-to-end models for point cloud data. Unlike RGB cameras that use visible light bands (384-769 THz) and Lidar ... NS wrote the manuscript and designed the majority of the experiments.
The dataset has 712 subjects, of which 80% are used for training and 20% for testing. Pedestrian occurrences in images and videos must be accurately recognized in a number of applications that may improve the quality of human life. For object class \(k\) the maximum F1 score is: ... Again the macro-averaged F1 score \(F_{1,\text{obj}}\) according to Eq. ... It is inspired by Distant object detection with camera and radar. On the other hand, radar is resistant to such conditions. Moreover, both the DBSCAN and the LSTM network are already equipped with all necessary parts in order to make use of additional time frames and most likely benefit if presented with longer time sequences. The radar data is repeated in several rows. The model uses landmark images to guide object detection by calculating the orientation and position of the target image. Object detection is a task concerned with automatically finding semantic objects in an image. ... data by transforming it into radar-like point cloud data and aggressive radar ... This can be counteracted by choosing smaller grid cell sizes, but at the cost of larger networks. Motivated by this deep learning ... Clipping the range at 25 m and 125 m prevents extreme values, i.e., unnecessarily high numbers at short distances or non-robust low thresholds at large ranges. ... network, we demonstrate that 4D Radar is a more robust sensor for adverse ... For the rest of the article, all object detection approaches are abbreviated by the name of their main component.
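The per-class maximum F1 score and its macro average mentioned above can be illustrated with a small sketch. It assumes the usual definition \(F_1 = 2TP / (2TP + FP + FN)\), evaluated at several confidence thresholds per class; the function names and the input layout (a list of `(tp, fp, fn)` tuples per class) are hypothetical and not taken from the article.

```python
def max_f1(counts_per_threshold):
    """Return the best F1 over all confidence thresholds.

    counts_per_threshold: iterable of (tp, fp, fn) tuples, one per
    confidence threshold (assumed input layout, for illustration only).
    """
    best = 0.0
    for tp, fp, fn in counts_per_threshold:
        denom = 2 * tp + fp + fn
        if denom > 0:
            best = max(best, 2 * tp / denom)
    return best


def macro_f1(per_class_counts):
    """Average the per-class maximum F1 scores with equal class weights."""
    scores = [max_f1(counts) for counts in per_class_counts.values()]
    if not scores:
        return 0.0
    return sum(scores) / len(scores)


if __name__ == "__main__":
    example = {
        "car": [(8, 2, 2), (9, 6, 1)],
        "pedestrian": [(1, 1, 3), (2, 4, 2)],
    }
    print(macro_f1(example))
```

Macro-averaging gives every class the same weight, so a rare class such as pedestrians influences the score as much as a frequent one.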
Therefore, only the largest cluster (DBSCAN) or group of points with the same object label (PointNet++) is kept within a predicted bounding box. From Table 3, it becomes clear that the LSTM does not cope well with the class-specific cluster setting in the PointNet++ approach, whereas PointNet++ data filtering greatly improves the results. Those point convolution networks are more closely related to conventional CNNs. Current high resolution Doppler radar data sets are not sufficiently large and diverse to allow for a representative assessment of various deep neural network architectures. However, with 36.89% mAP it is still far worse than other methods. It can be expected that high resolution sensors, which are the current state of the art for many research projects, will eventually make it into series production vehicles. Using the notation from [55], LAMR is expressed as: ... where \(f \in \{10^{-2},10^{-1.75},\dots,10^{0}\}\) denotes 9 equally logarithmically spaced FPPI reference points.

$$ \tilde{v}_{r} = v_{r} - \left(\begin{array}{c} v_{\text{ego}} + m_{y} \cdot \dot{\phi}_{\text{ego}}\\ m_{x} \cdot \dot{\phi}_{\text{ego}} \end{array}\right)^{T} \cdot \left(\begin{array}{c} \cos(\phi+m_{\phi})\\ \sin(\phi+m_{\phi}) \end{array}\right). $$

Simulate radar signals to train machine and deep learning models for target and signal classification. ... 4D Radar tensor (4DRT) data with power measurements along the Doppler, range, ... The order in which detections are matched is defined by the objectness or confidence score \(c\) that is attached to every object detection output.
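As a rough illustration of the log-average miss rate (LAMR) over the nine FPPI reference points named above, the following sketch assumes the common formulation: at each reference point \(f\), take the miss rate at the largest achieved FPPI not exceeding \(f\), then average the logarithms and exponentiate. The function name, the input layout, and the fallback miss rate of 1.0 when no operating point lies below a reference point are assumptions for this example, not details confirmed by the text.

```python
import math


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Sketch of LAMR over log-spaced FPPI reference points.

    fppi, miss_rate: equally long sequences, one entry per confidence
    threshold (hypothetical input layout).
    """
    # Reference points 10^-2, 10^-1.75, ..., 10^0 (9 points by default).
    refs = [10 ** (-2 + 0.25 * i) for i in range(num_points)]
    pairs = sorted(zip(fppi, miss_rate))
    logs = []
    for f in refs:
        # Miss rates of all operating points with FPPI <= f, ordered by FPPI.
        candidates = [mr for x, mr in pairs if x <= f]
        # Assumption: if no operating point qualifies, use a miss rate of 1.0.
        mr = candidates[-1] if candidates else 1.0
        # Clamp to avoid log(0) for a perfect detector.
        logs.append(math.log(max(mr, 1e-10)))
    return math.exp(sum(logs) / len(logs))


if __name__ == "__main__":
    print(log_average_miss_rate([0.005, 0.05, 0.5], [0.8, 0.4, 0.2]))
```

Because the score is built from miss rates, lower values are better, in contrast to AP or F1.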
... relatively small number of samples compared to the existing camera and Lidar ... Also for both IOU levels, it performs best among all methods in terms of AP for pedestrians. For the LSTM method with PointNet++ clustering, two variants are examined. Over all scenarios, the tendency can be observed that PointNet++ and PointPillars tend to produce too many false positive predictions, while the LSTM approach goes in the opposite direction and rather leaves out some predictions. With the rapid development of deep learning techniques, deep convolutional neural networks (DCNNs) have become more important for object detection. Finding the best way to represent objects in radar data could be the key to unlock the next leap in performance. All codes are available at ... As for AP, the LAMR is calculated for each class first and then macro-averaged. Ground truth and predicted classes are color-coded. Amplitude normalization helps the CNN converge faster. When distinct portions of an object move in front of a radar, micro-Doppler signals are produced that may be utilized to identify the object. September 09, 2021. ... 12 is reported. This may hinder the development of sophisticated data-driven deep learning techniques for Radar-based perception. Images consist of a regular 2D grid which facilitates processing with convolutions. Since the notion of distance still applies to point clouds, a lot of research is focused on processing neighborhoods with a local aggregation operator.
Apparently, YOLO manages to better preserve the sometimes large extents of this class than other methods. A possible reason is that many objects appear in the radar data as elongated shapes. ... augmentation techniques. Object detection comprises two parts: image classification and then image localization. Radar returns originate from different objects and different parts of objects. However, it can also be presumed that with constant model development, e.g., by choosing high-performance feature extraction stages, those kinds of architectures will at least reach the performance level of image-based variants. This can easily be adapted to radar point clouds by calculating the intersection and union based on radar points instead of pixels [18, 47]: ... An object instance is defined as matched if a prediction has an IOU greater than or equal to some threshold. However, results suggest that the LSTM network does not cope well with the clusters found in this way. While this variant does not actually resemble an object detection metric, it is quite intuitive to understand and gives a good overview of how well the inherent semantic segmentation process was executed. Stronger returns tend to obscure weaker ones. This supports the claim that these processing steps are a good addition to the network. PointPillars. Finally, the PointPillars approach in its original form is by far the worst among all models (36.89% mAP at IOU=0.5).
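The point-based IOU and the threshold matching described above can be sketched as follows. The example assumes that each prediction and each ground truth object is given as a set of radar point indices, and that predictions are matched greedily in order of descending confidence; the function names and the data layout are hypothetical.

```python
def point_iou(pred_points, gt_points):
    """IOU computed on sets of radar point indices instead of pixels."""
    pred, gt = set(pred_points), set(gt_points)
    union = pred | gt
    if not union:
        return 0.0
    return len(pred & gt) / len(union)


def match_detections(predictions, ground_truths, iou_threshold=0.5):
    """Greedy matching sketch; returns (tp, fp, fn).

    predictions: list of (confidence, point_ids) tuples.
    ground_truths: list of point_ids collections.
    Each ground truth object can be matched at most once.
    """
    unmatched = list(range(len(ground_truths)))
    tp = fp = 0
    # Higher confidence predictions get the first pick of ground truths.
    for _, points in sorted(predictions, key=lambda p: p[0], reverse=True):
        best, best_iou = None, 0.0
        for g in unmatched:
            iou = point_iou(points, ground_truths[g])
            if iou >= iou_threshold and iou > best_iou:
                best, best_iou = g, iou
        if best is None:
            fp += 1
        else:
            tp += 1
            unmatched.remove(best)
    # Ground truth objects left without a match count as false negatives.
    return tp, fp, len(unmatched)


if __name__ == "__main__":
    gts = [{1, 2, 3, 4}, {10, 11}]
    preds = [(0.9, {1, 2, 3}), (0.8, {1, 2, 3, 4}), (0.3, {20, 21})]
    print(match_detections(preds, gts))
```

Counting points rather than box area means that a single missed or extra radar point can change the IOU noticeably for small objects, which fits the observation that the IOU drops quickly for noisy, elongated shapes.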
| Method | Epoch | Recall | AP0.5 |
|---|---|---|---|
| The proposed method | 60 | 95.4% | 86.7% |
| The proposed method | 80 | 96.7% | 88.2% |

The experiment is implemented on the deep learning toolbox using the training data and the Cascade CNN model. All authors read and approved the final manuscript. However, the existing solution for classification of radar echo signal is limited because its deterministic analysis is too complicated to ... 4D imaging radar is a high-resolution, long-range sensor technology that offers significant advantages over 3D radar, particularly when it comes to identifying the height of an object. As the first such model, PointNet++ specified a local aggregation by using a multilayer perceptron. The increased lead at IOU=0.3 is mostly caused by the high AP for the truck class (75.54%). In the first step, the regions of the presence of object in ... object from 3DRT.
In this article, an approach using a dedicated clustering algorithm is chosen to group points into instances. Below is a code snippet of the training function; not shown are the steps required to pre-process and filter the data. At training time, this approach turns out to greatly increase the results during the first couple of epochs when compared to the base method. On the other hand, radar is resistant to such ... Out of all introduced scores, the mLAMR is the only one for which lower scores correspond to better results. DBSCAN + LSTM. In comparison to these two approaches, the remaining models all perform considerably worse.

$$ \mathcal{L} = \mathcal{L}_{\text{obj}} + \mathcal{L}_{\text{cls}} + \mathcal{L}_{\text{loc}}. $$

In contrast to these expectations, the experiments clearly show that angle estimation deteriorates the results for both network types. Also, additional fine tuning is easier, as individual components with known optimal inputs and outputs can be controlled much better than, e.g., replacing part of a YOLOv3 architecture. A Simple Way of Solving an Object Detection Task (using Deep Learning). The image below is a popular example illustrating how an object detection algorithm works. Low-Level Data Access and Sensor Fusion. A second important aspect for future autonomously driving vehicles is the question of whether point clouds will remain the preferred data level for the implementation of perception algorithms. ... 2 is replaced by the class-sensitive filter in Eq.
... that use infrared bands (361-331 THz), Radars use relatively longer ... Synthetic aperture radar (SAR) imagery change detection (CD) is still a crucial and challenging task.


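The spatio-temporal neighborhood criterion used when clustering radar points (Euclidean distance in $x$ and $y$ plus a Doppler difference weighted by $\epsilon_{v_r}^{-1}$, combined with a time gate) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function name `is_neighbor`, the argument names, and the threshold values in the usage lines are hypothetical, and taking the absolute value of the time difference is an assumption.

```python
import math


def is_neighbor(dx, dy, dv_r, dt, eps_v_r, eps_xyv_r, eps_t):
    """Check whether two radar points count as neighbors.

    dx, dy  : position differences between the two points
    dv_r    : difference of their radial (Doppler) velocities
    dt      : difference of their measurement times
    eps_*   : thresholds (hypothetical values, chosen by the caller)
    """
    # The Doppler difference is scaled by 1/eps_v_r before it enters the distance.
    dist = math.sqrt(dx ** 2 + dy ** 2 + (dv_r / eps_v_r) ** 2)
    # Both conditions must hold: combined distance and time gate.
    # abs() on dt is an assumption; the formula itself only states dt < eps_t.
    return dist < eps_xyv_r and abs(dt) < eps_t


# Illustrative thresholds only; they are not taken from the source.
close = is_neighbor(0.3, 0.4, 0.0, 0.1, eps_v_r=1.0, eps_xyv_r=1.0, eps_t=0.5)
far_in_doppler = is_neighbor(0.3, 0.4, 2.0, 0.1, eps_v_r=1.0, eps_xyv_r=1.0, eps_t=0.5)
```

Scaling the Doppler term by $\epsilon_{v_r}$ lets a single threshold $\epsilon_{xyv_r}$ bound position and velocity differences together, while the time gate is checked separately.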
$$ \sqrt{\Delta x^{2} + \Delta y^{2} + \epsilon^{-2}_{v_{r}}\cdot\Delta v_{r}^{2}} < \epsilon_{xyv_{r}} \:\wedge\: \Delta t < \epsilon_{t} $$

$$ \text{mAP} = \frac{1}{\tilde{K}} \sum_{\tilde{K}} \text{AP} $$

$$ {}\text{LAMR} = \exp\!\left(\!\frac{1}{9} \sum_{f} \log\!